React and other JavaScript frameworks have made building apps and websites much faster. But for SEO, they pose a real challenge: pages get indexed more slowly, and part of the content might not be visible to search engines at all without proper technical setup and internal linking.

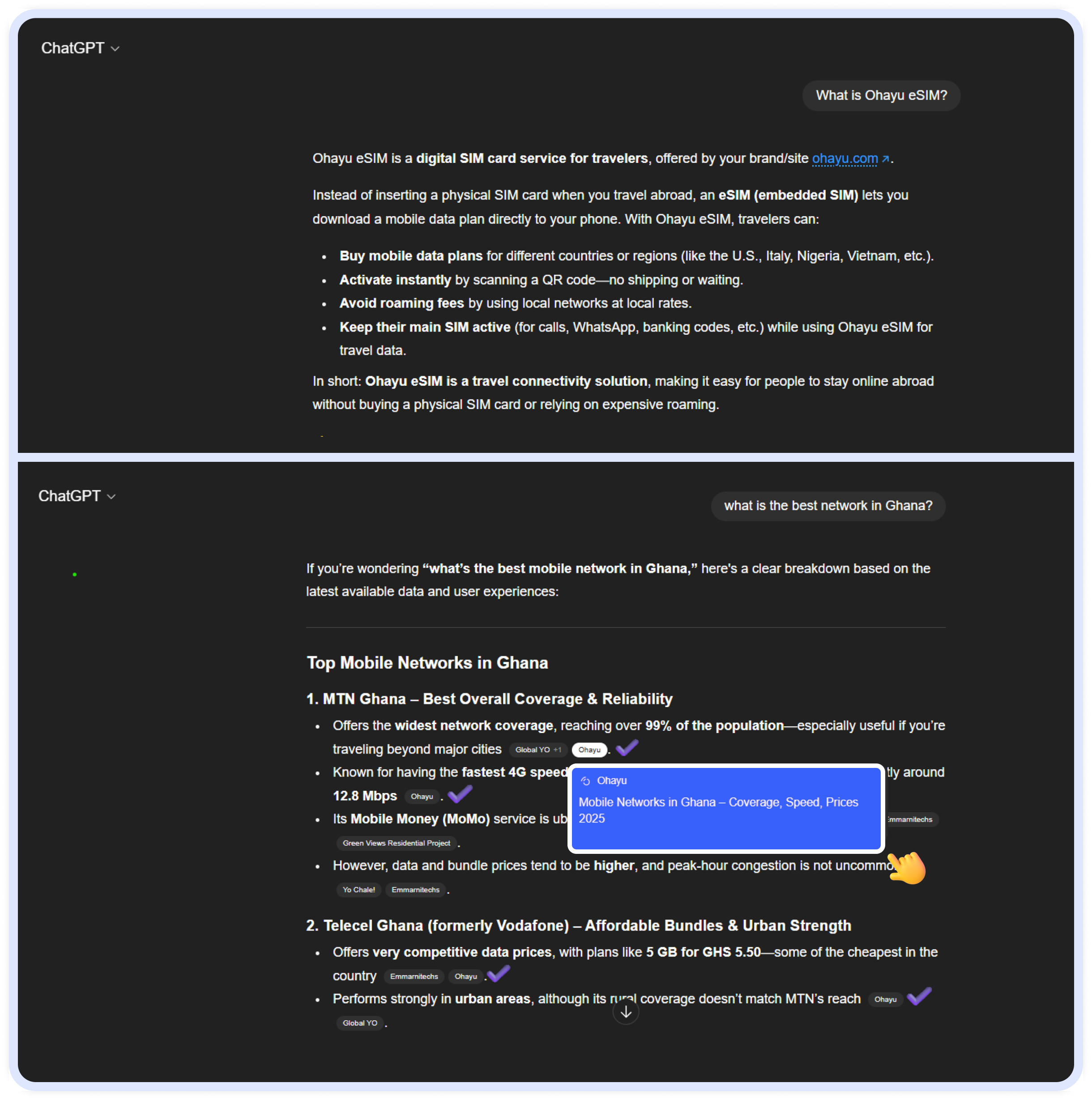

Now AI (artificial intelligence) has entered the picture. It’s not only Google and Bing that are trying to crawl your site, but also ChatGPT, Perplexity, and other AI systems. To scan, index, and rank your pages correctly, they need a clear structure and clean HTML, which SPA sites render only on the client side. So how do you get them to index content generated by JavaScript that AI bots can’t even “see” or process?

The author of this case study is Allison Reed, SEO Strategist at Ohayu, a company that created its own eSIM and provides affordable mobile internet for international travelers. In this article, I’ll share our experience with SEO for a React-based JavaScript site and explain what owners of single-page apps should do if they want to get indexed by traditional search engines and generative AI systems.

SSR or SPA - What Is the Difference?

We all know pretty well how to do SEO on SSR (server-side rendering) websites. Most specialists actually cut their teeth working with WordPress, Shopify, or website builders. On these sites, the page HTML is generated on the server with all the content already in it, and it is sent in the same form to real visitors and search engine bots alike.

Example: open any page on the Collaborator blog, like a lovely article by Oleksandra Khilova https://collaborator.pro/blog/link-building-startups, inspect its elements with your browser’s developer tools, and then find the same HTML tags in the page source: view-source:https://collaborator.pro/blog/link-building-startups

You will see that the HTML is basically the same. Search engine bots and AI bots will easily access and crawl this content. This is how SSR usually looks.

But there are also SPA (single-page application) JavaScript sites built with frameworks like React, Vue, or Angular, which follow a completely different logic. In fact, they work very much like mobile apps. Pages are rendered only in the browser. This means that on the first visit, a search engine bot might see only a nearly empty HTML template with script tags (the same for every page on the site) instead of the actual content. This makes indexing and ranking more complicated.

Example: open https://ohayu.com/esim/united-states-us/ and inspect the SPA page elements in the browser. All headings and content are in place. But if you view the source at view-source:https://ohayu.com/esim/united-states-us/, you get only a default HTML template with script references and no content.

This is typical SPA behavior. Such sites are usually very flexible and dynamic, making it easy to:

- run A/B tests;

- make bulk changes;

- personalize the user experience;

- share the same backend between the app and web versions.

But this very dynamic principle is what creates problems for search engine optimization.

If we compare both technologies to something more familiar, a regular SSR site works like a printed book: you open a page, and the text is already there. Everything is visible both to the user and the search engine; all the pages are in place.

An SPA is more like an e-book, where the content appears only when the user interacts with it. For the user, it looks fine, but for Google or other search engines, the page can look empty during the first visit.

That’s why an SPA needs additional solutions so that search engines can crawl its dynamic pages as easily as people do.
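The view-source comparison from the Collaborator and Ohayu examples can also be scripted. Below is a minimal TypeScript sketch that assumes you already have a page’s raw HTML (for example, from `fetch(url).then((r) => r.text())` in Node.js 18+). It strips scripts, styles, and tags to approximate what a crawler that does not execute JavaScript can read. The function names and the 200-character threshold are illustrative choices, not a standard. Regex-based stripping is also only a rough stand-in for real HTML parsing.

```typescript
// Rough approximation of what a crawler that does NOT run JavaScript can read:
// it only sees the raw HTML response, so script bodies add no visible content.
function visibleTextFromRawHtml(html: string): string {
  return html
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, " ") // drop inline and external scripts
    .replace(/<style\b[^>]*>[\s\S]*?<\/style>/gi, " ") // drop CSS
    .replace(/<[^>]+>/g, " ") // drop remaining tags but keep the text between them
    .replace(/\s+/g, " ")
    .trim();
}

interface RawHtmlReport {
  hasH1: boolean;
  textLength: number;
  looksLikeEmptyShell: boolean;
}

// minTextChars is an arbitrary illustrative threshold, not a search engine rule.
function auditRawHtml(html: string, minTextChars = 200): RawHtmlReport {
  const text = visibleTextFromRawHtml(html);
  const hasH1 = /<h1\b/i.test(html);
  return {
    hasH1,
    textLength: text.length,
    looksLikeEmptyShell: !hasH1 && text.length < minTextChars,
  };
}

// Hypothetical inputs: a typical SPA shell versus a server-rendered page.
const spaShell =
  '<!doctype html><html><head><title>Ohayu</title><script src="/main.js"></script></head>' +
  '<body><div id="root"></div></body></html>';
const ssrPage =
  "<html><body><h1>eSIM for the United States</h1><p>" +
  "Plans, prices, and coverage details. ".repeat(10) +
  "</p></body></html>";

console.log(auditRawHtml(spaShell)); // looksLikeEmptyShell: true
console.log(auditRawHtml(ssrPage)); // looksLikeEmptyShell: false
```

To see what prerendering serves bots, you could fetch the same URL again with a crawler’s user-agent string and compare the two reports.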

Search Engines and JavaScript SPA Sites

To understand why dynamic pages don’t work well for search engines, let’s look at the basics using Google as an example.

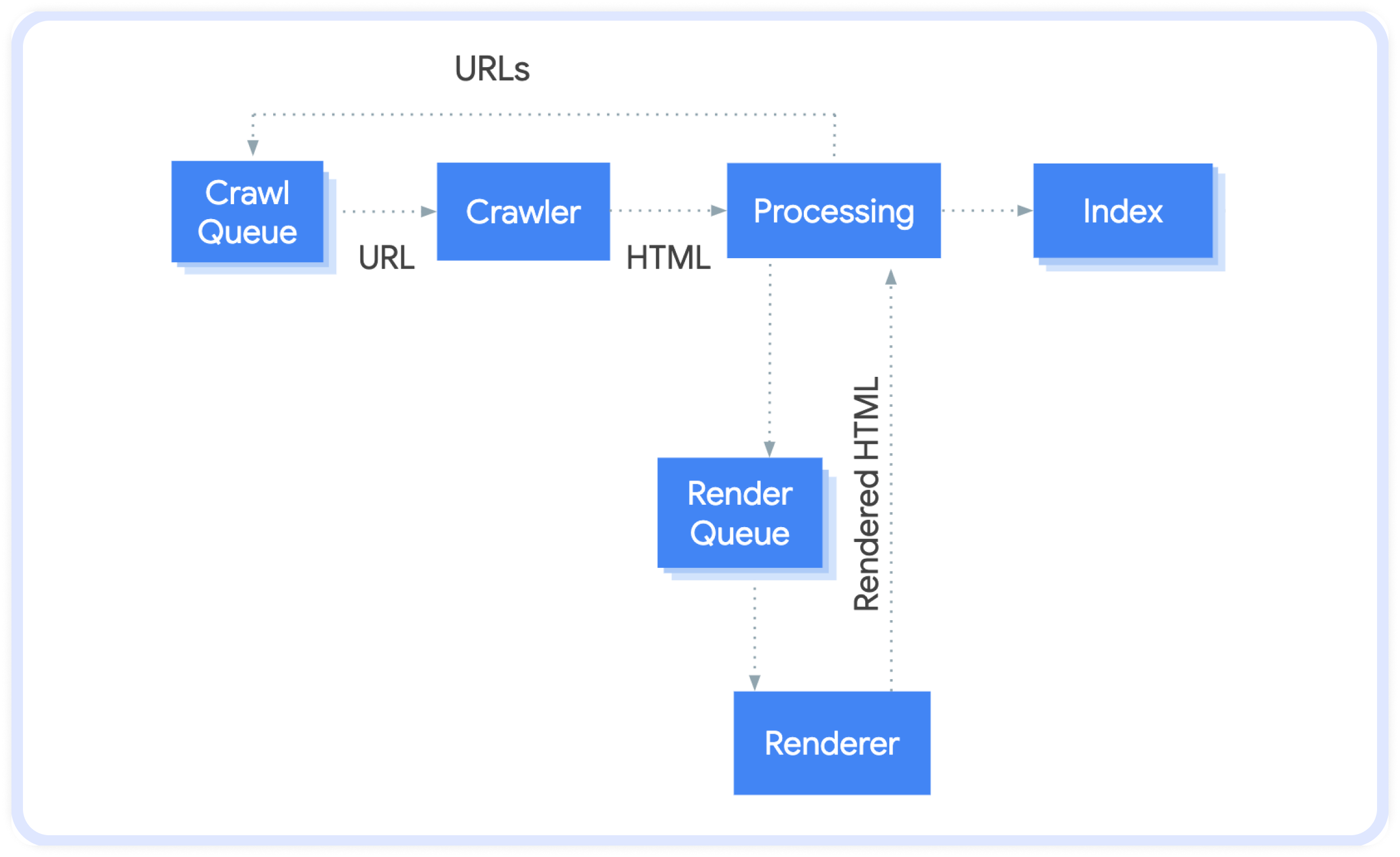

Google Search has three main stages of working with any site:

- Crawling — the bots follow links and collect the data on visited pages (check a simple explanation in How Google Search works to refresh your knowledge).

- Indexing — the search engine analyzes the received content, structure, keywords, and media, and adds the page to its database.

- Ranking — when a user types a query, Google selects the most relevant pages from the index and sorts them by multiple factors.

Image source: https://developers.google.com/search/docs/crawling-indexing/javascript/javascript-seo-basics

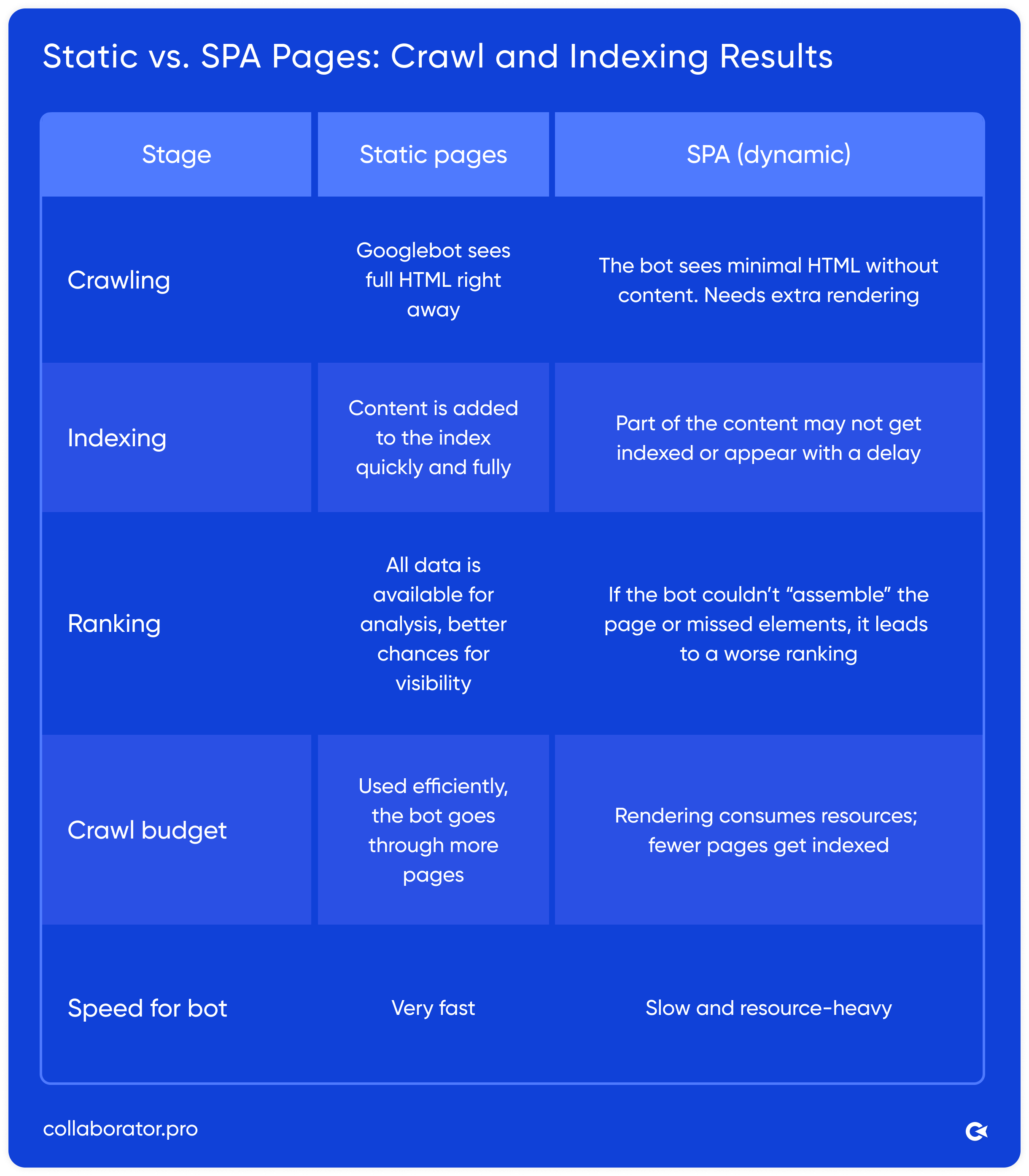

Why Does Google Like Static SSR Pages More?

Static pages immediately return ready-made HTML with all the content. That’s fast, and the bot doesn’t waste resources on rendering JavaScript.

Dynamic pages (SPAs built with React, Vue, or Angular) return minimal HTML, and the main text and blocks are generated in the browser. To process the actual content, Google has to run an additional step: rendering. This step is expensive, slow, and not always successful.

That’s why:

- Static pages get indexed faster.

- Content is almost always fully processed.

- The search engine bot spends less crawl budget (a limited resource that defines how many pages Google is ready to crawl on your website within a given period of time; see JavaScript SEO basics).

Here’s a simple comparison table:

Simply put, Google is like a librarian. If you bring him a ready-printed book (a static page), he puts it on the shelf right away. But if you give him a kit of blank pages with assembly instructions (an SPA), he has to assemble the book himself, and there’s no guarantee he will finish it.

Google says it can process JavaScript, but in practice, SPA rendering often lags or doesn’t happen at all — because of large bundles, client-side navigation, and crawl budget limits.

At this point, Google is the only search engine that handles JavaScript indexing reasonably well. The rest struggle a lot, for example:

- Bing: JavaScript support has improved, but indexing of complex SPAs is unreliable.

- DuckDuckGo and Yahoo: both rely on Bing’s index, so they have the same limits.

- Baidu, Naver, Seznam: they basically don’t index dynamic content at all.

- AI platforms like ChatGPT, Claude, and Perplexity: their crawlers don’t render JavaScript at all.

- Meta, LinkedIn, X, and other social platforms: their crawlers don’t render JavaScript either.

Conclusion: if we want the site to be indexed properly and to be accessible to AI bots and social networks, we need extra technical solutions. Next, I’ll show what exactly we’ve already implemented on our SPA website, and what we are still working on.

SPA + SSR Setup

The setup of the Ohayu eSIM website consists of two technically different parts:

- Main page and country pages — React-based SPA (dynamic).

- Blog — WordPress-based SSR (static).

At the initial stage of product development, the website consisted of 200 SPA-based pages. Without extra fixes, Google indexed fewer than 50 of them (<25%) during the first 6 months. As a result, the site got fewer than 10K monthly impressions, Open Graph snippets didn’t work, and the site was completely invisible to Bing and LLMs.

Realizing how problematic this setup was, we started making changes to the SPA. At the same time, we launched a WordPress blog on the same domain (routed to /blog/) to build traffic and compare SPA and SSR results.

Stage 1: Technical and On-Page SEO Tasks

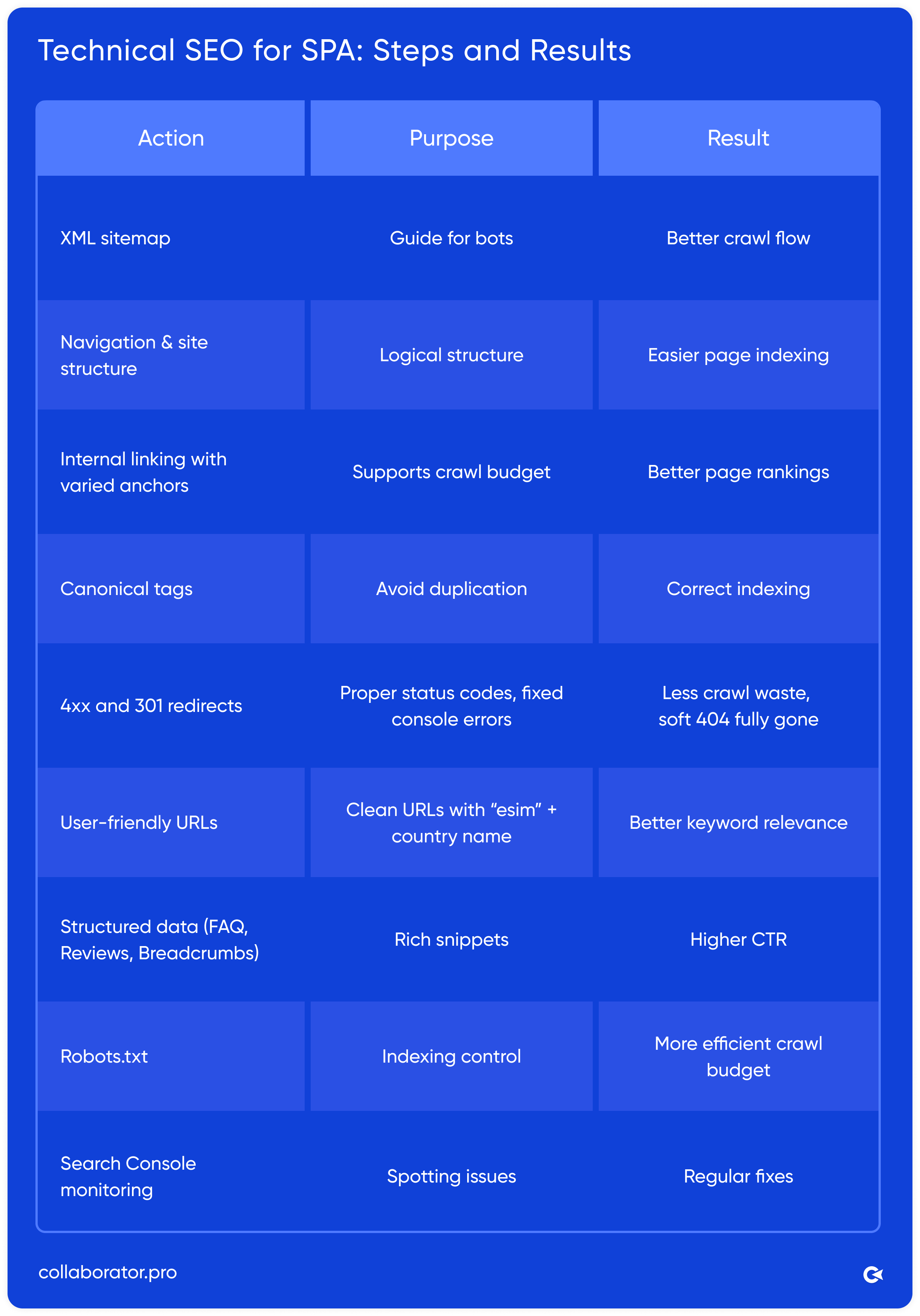

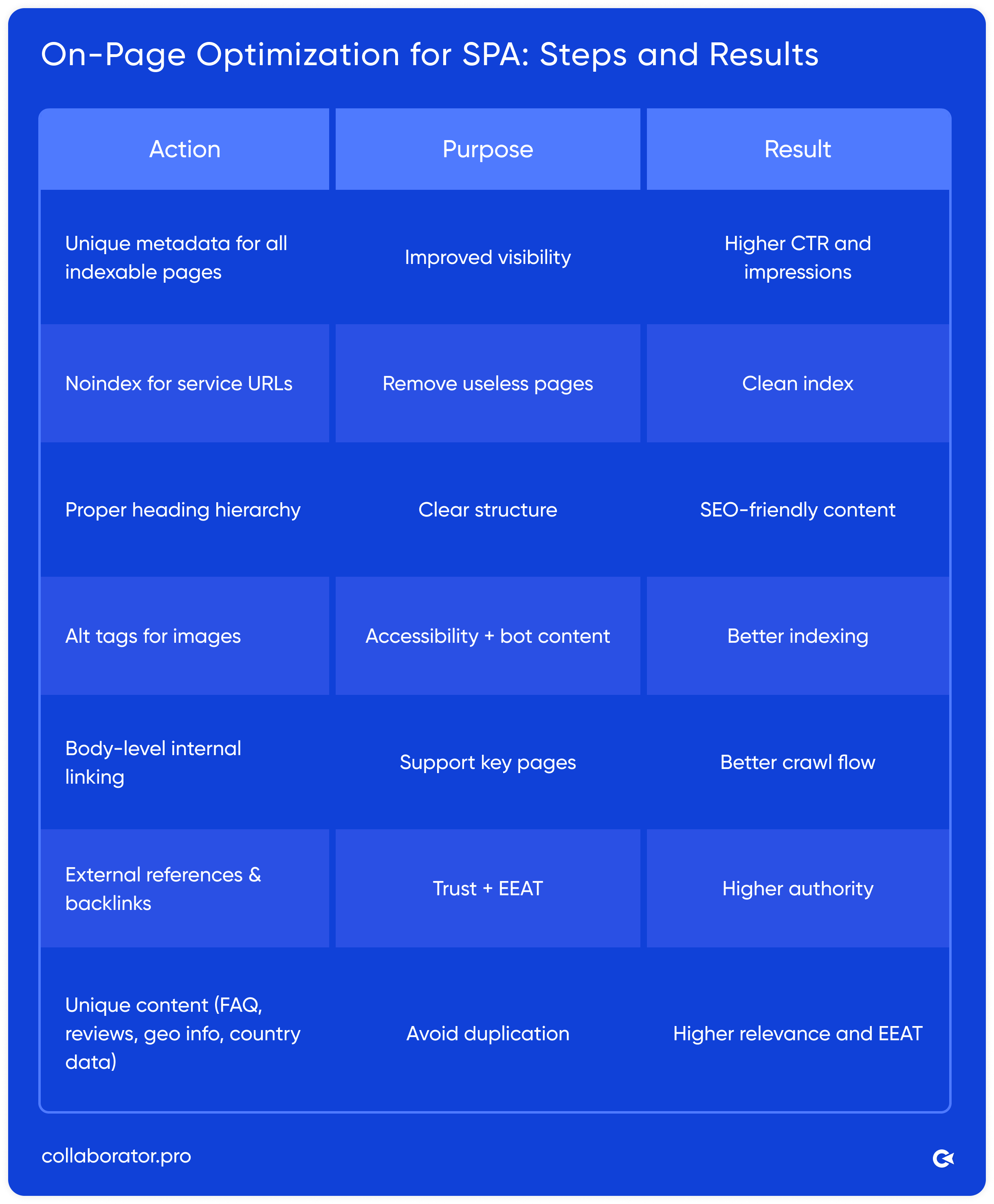

We split basic SPA optimization into two directions: technical SEO and on-page optimization.

Technical SEO for SPA

On-page SEO for SPA

This checklist works for any SPA and is the basic step without which content simply won’t be indexed effectively. After completing the main technical fixes and content optimization, we moved on to the next stage — prerendering.

Stage 2: Prerendering for SPA

To solve indexing issues and expand keyword reach, we connected Prerender.io (April 3, 2025). This service renders SPA pages and caches them as static HTML, which makes it easier for search engines to crawl and index the content.

We did the following.

- Connected Prerender to the SPA.

- Sent cached HTML versions to Googlebot, Bingbot, and others.

- Checked logs regularly to make sure bots received prerendered pages.

- Tested indexing speed and content visibility in the SERPs.

We decided to serve prerendered pages only to search engine, social media, and AI bots. This let us continue product A/B testing and personalization, because users kept receiving the dynamic version of the site.
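Serving prerendered HTML only to bots is usually done with user-agent detection in front of the SPA. Google calls this setup dynamic rendering. Below is a minimal TypeScript sketch of that routing decision, built on several assumptions. The user-agent list is illustrative. The `/blog/` exclusion mirrors the WordPress setup described earlier. The `https://service.prerender.io/` base URL and the `X-Prerender-Token` header follow the convention of Prerender.io’s open-source middleware, so confirm both against their current documentation. The sketch only decides where a request should go; wiring it into Express, a CDN worker, or nginx is left out.

```typescript
// Illustrative user-agent substrings; check each crawler's own documentation
// for its current token before relying on a list like this.
const BOT_PATTERNS: RegExp[] = [
  /googlebot|bingbot|yandexbot|duckduckbot/i, // search engines
  /facebookexternalhit|linkedinbot|twitterbot/i, // social link previews
  /gptbot|chatgpt-user|oai-searchbot|claudebot|perplexitybot/i, // AI crawlers
];

// Files that should always be served as-is, never prerendered.
const STATIC_ASSET = /\.(js|css|png|jpe?g|gif|svg|webp|ico|woff2?|json|xml|txt)$/i;

function shouldPrerender(userAgent: string, path: string): boolean {
  if (STATIC_ASSET.test(path)) return false;
  // Assumption: the WordPress blog under /blog/ is already server-rendered.
  if (path === "/blog" || path.startsWith("/blog/")) return false;
  return BOT_PATTERNS.some((re) => re.test(userAgent));
}

type Upstream =
  | { kind: "spa" } // humans: serve the normal client-side app (A/B tests keep working)
  | { kind: "prerender"; url: string; headers: Record<string, string> };

// Assumption: a Prerender.io-style service that takes the full page URL appended
// to its base URL and an account token in the X-Prerender-Token header.
function chooseUpstream(
  pageUrl: string,
  userAgent: string,
  token: string,
  serviceBase = "https://service.prerender.io/",
): Upstream {
  const { pathname } = new URL(pageUrl);
  if (!shouldPrerender(userAgent, pathname)) return { kind: "spa" };
  return {
    kind: "prerender",
    url: serviceBase + pageUrl,
    headers: { "X-Prerender-Token": token },
  };
}
```

Google documents dynamic rendering as a workaround rather than a long-term solution. The prerendered HTML should also carry the same content users see; otherwise, it can look like cloaking.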

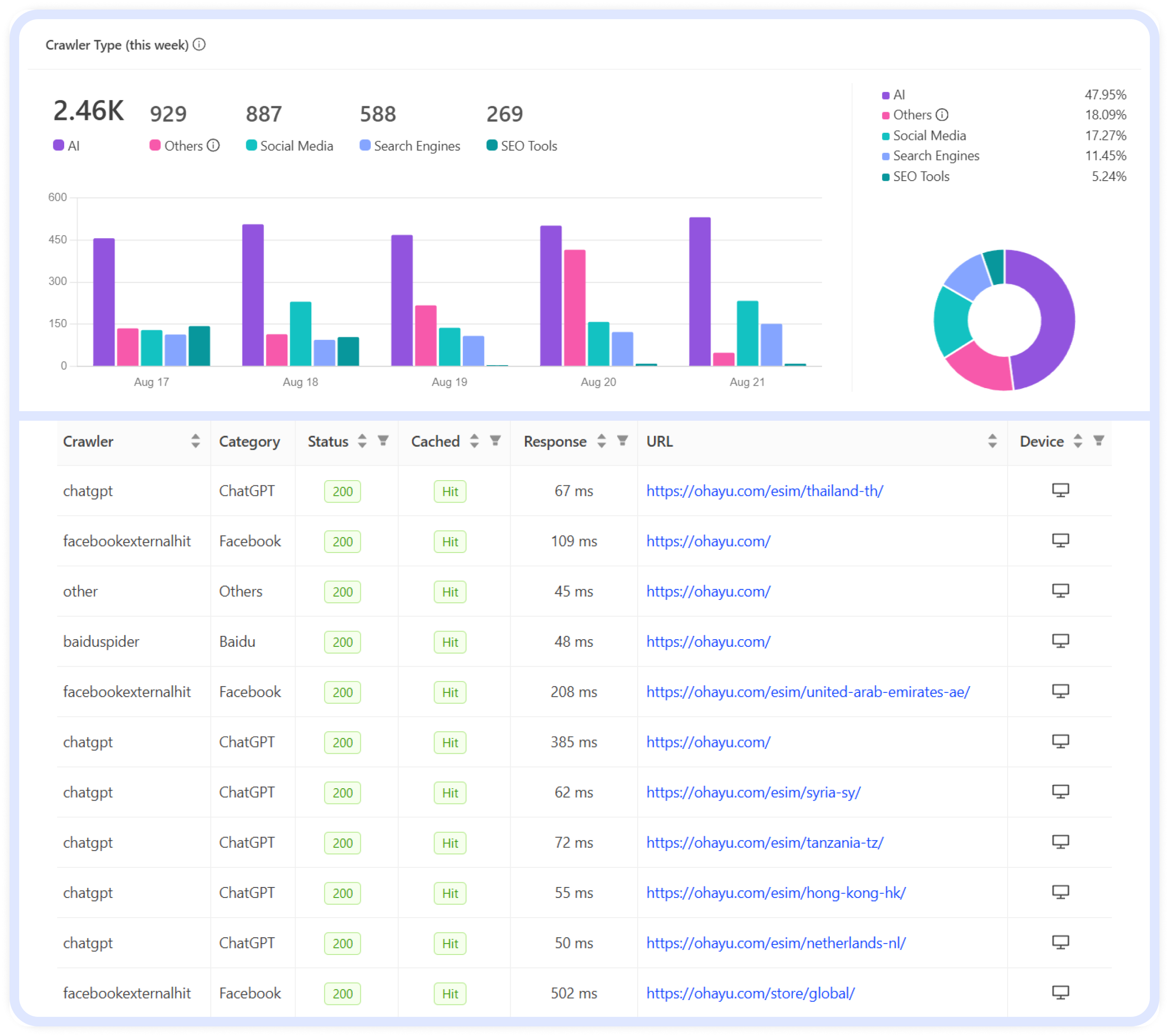

In the screenshots, you can see that after installing prerendering, AI bots started actively scanning the site (47.95% of all requests), followed by social networks (17.27%) and, of course, search engine bots (11.45%).
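A breakdown like this can be reproduced from your own access logs. The same pass also checks that bots actually receive prerendered pages. Below is a minimal TypeScript sketch that assumes log lines have already been parsed into records with a user agent and a hypothetical `prerendered` flag (for example, derived from a header your proxy adds). The category patterns are illustrative and not necessarily the classification behind the screenshots.

```typescript
type BotCategory = "ai" | "social" | "search" | "other";

// Illustrative user-agent substrings; extend them from your own logs.
const CATEGORIES: Array<[BotCategory, RegExp]> = [
  ["ai", /gptbot|chatgpt-user|oai-searchbot|claudebot|perplexitybot/i],
  ["social", /facebookexternalhit|linkedinbot|twitterbot/i],
  ["search", /googlebot|bingbot|yandexbot|duckduckbot/i],
];

// Hypothetical log shape: `prerendered` could come from a response header your proxy adds.
interface LogRecord {
  userAgent: string;
  prerendered: boolean;
}

function categorize(userAgent: string): BotCategory {
  for (const [category, pattern] of CATEGORIES) {
    if (pattern.test(userAgent)) return category;
  }
  return "other";
}

interface LogSummary {
  sharePct: Record<BotCategory, number>; // share of all requests, rounded to 2 decimals
  botMisses: number; // bot requests that did NOT get prerendered HTML
}

function summarize(records: LogRecord[]): LogSummary {
  const counts: Record<BotCategory, number> = { ai: 0, social: 0, search: 0, other: 0 };
  let botMisses = 0;
  for (const r of records) {
    const category = categorize(r.userAgent);
    counts[category]++;
    if (category !== "other" && !r.prerendered) botMisses++;
  }
  const total = records.length || 1; // avoid dividing by zero on an empty log
  const pct = (n: number) => Math.round((n / total) * 10000) / 100;
  return {
    sharePct: {
      ai: pct(counts.ai),
      social: pct(counts.social),
      search: pct(counts.search),
      other: pct(counts.other),
    },
    botMisses,
  };
}
```

A non-zero `botMisses` is the signal to investigate: some crawler is still getting the empty SPA template.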

Results:

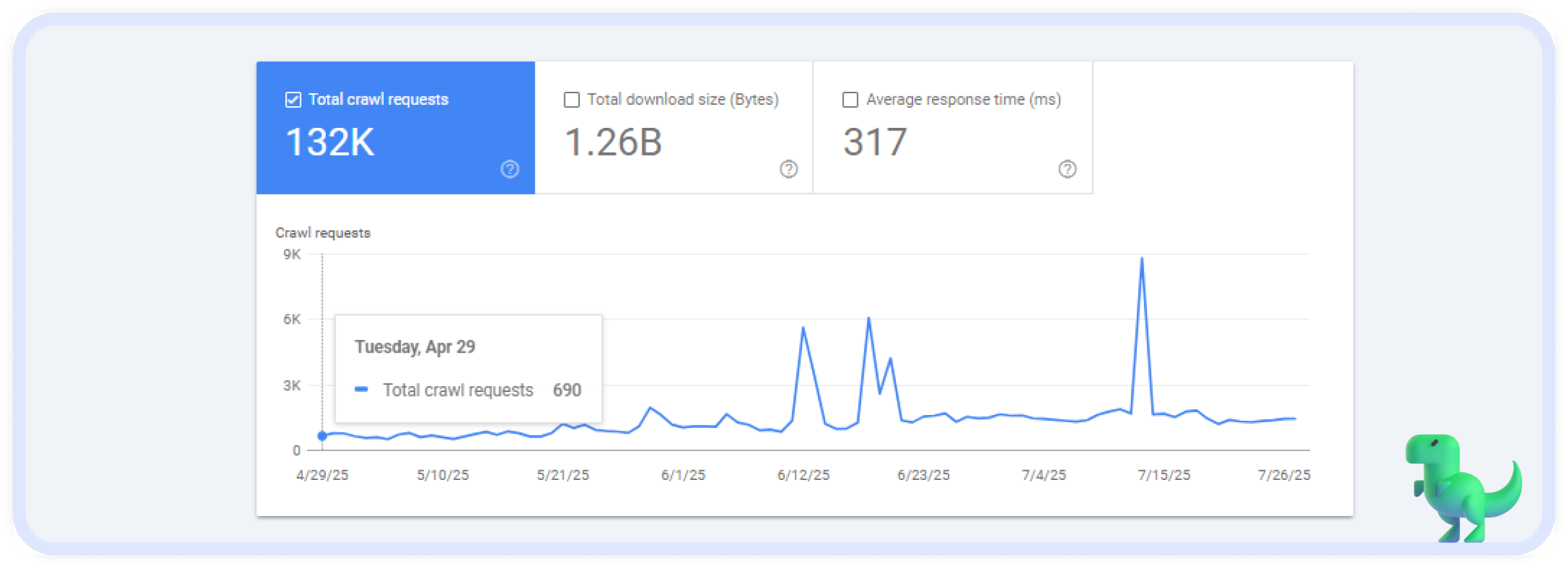

- Indexing improved from <25% to ~80% of country pages.

- Google crawl budget increased from ~600 to 1,400 pages per day, according to Google Search Console.

- Impressions increased by more than 5x.

- Open Graph metadata started working correctly on social media.

- Bing and Yandex picked up and indexed the content.

- The content became accessible for ChatGPT and other LLMs.

At the same time, we kept the WordPress blog active. This way, we could compare SPA + prerender results with traditional SSR performance.

Stage 3: Results and Comparison (SPA + Prerender vs WordPress SSR)

We ran a WordPress blog alongside the SPA to stabilize and grow organic traffic. The blog’s results:

- ~80 posts published.

- 5000 clicks per month.

- ~1 million impressions.

- Average Google position — 13.9.

- Regular appearance in AI overviews.

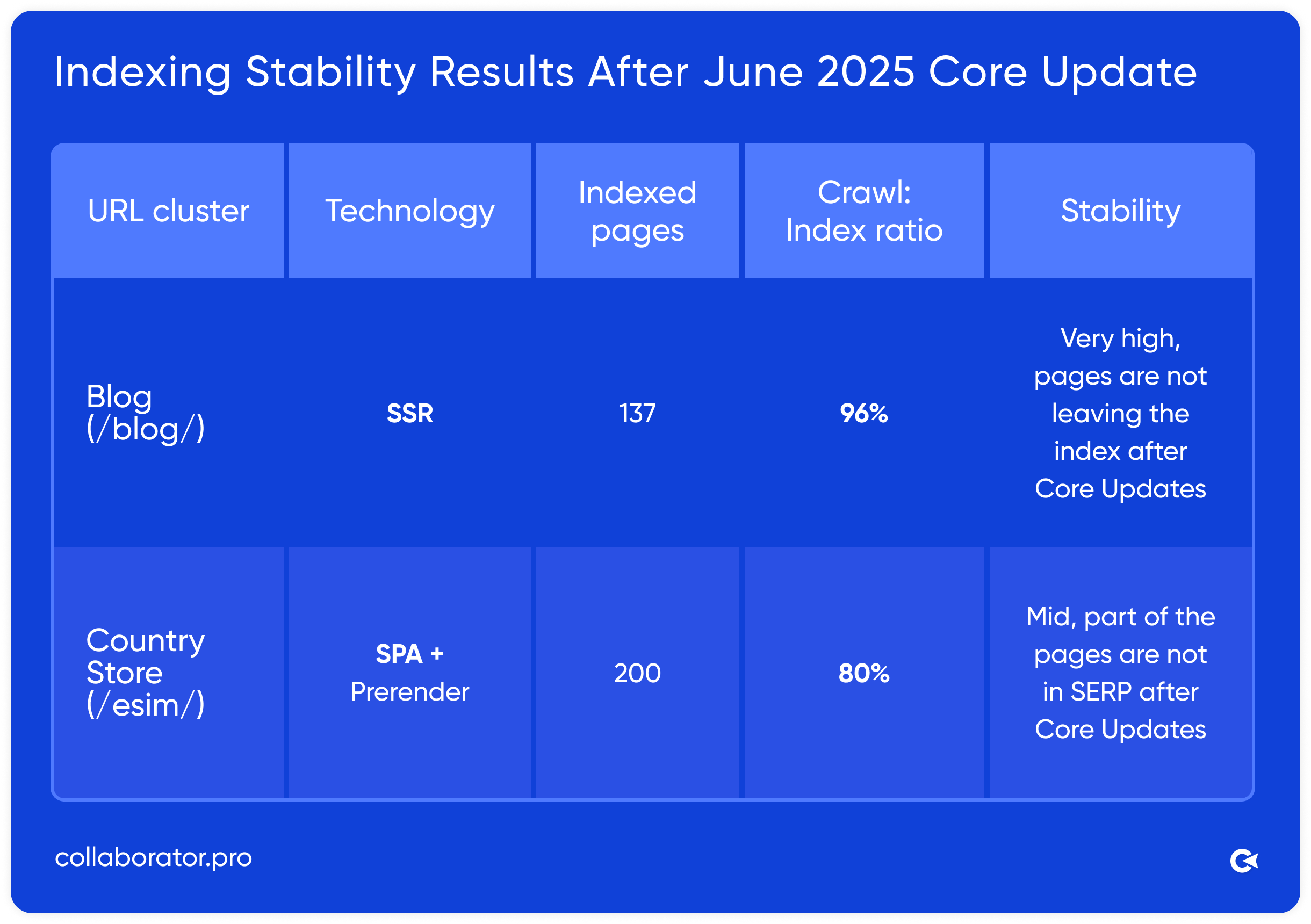

Regular blogging on the same domain helped us compare the two technologies side by side and monitor indexing stability after Google core updates. When another algorithm update in June 2025 pushed some of the site’s pages out of the index, we quickly analyzed the URL clusters and saw the following.

*Crawl:index ratio is the number of pages that actually made it into the index divided by the number of pages the search engine crawled. If the indicator is high (for example, 80-90%), most of the pages the bot sees are accessible and of sufficient quality to appear in search.

In fact, this is an indicator of indexing efficiency: it shows whether the crawl budget is converted into visibility in the search results.
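The ratio is easy to compute per URL cluster. Below is a minimal TypeScript sketch that assumes you already have crawled and indexed counts per cluster, for example from crawl stats and index coverage exports. The cluster names and numbers in the example are hypothetical. The 80% threshold is taken from the range mentioned above and is not a Google rule.

```typescript
interface ClusterStats {
  cluster: string; // e.g. a URL prefix such as "/esim/"
  crawled: number; // pages the search engine crawled
  indexed: number; // of those, pages that made it into the index
}

// crawl:index ratio = indexed / crawled, as a percentage rounded to one decimal.
function crawlIndexRatio({ crawled, indexed }: ClusterStats): number {
  if (crawled === 0) return 0; // nothing crawled yet, so nothing to convert
  return Math.round((indexed / crawled) * 1000) / 10;
}

// Returns clusters whose ratio falls below the threshold (80% is an illustrative cut-off).
function weakClusters(stats: ClusterStats[], thresholdPct = 80): string[] {
  return stats.filter((s) => crawlIndexRatio(s) < thresholdPct).map((s) => s.cluster);
}

// Hypothetical numbers, for illustration only.
const sample: ClusterStats[] = [
  { cluster: "/blog/", crawled: 40, indexed: 36 },
  { cluster: "/esim/", crawled: 200, indexed: 120 },
];
console.log(sample.map((s) => `${s.cluster}: ${crawlIndexRatio(s)}%`)); // ratios of 90% and 60%
console.log(weakClusters(sample)); // only "/esim/" is below 80%
```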

In our case, the table shows that SSR provides more stable indexing of pages (96%), even after Google Core Updates. The SPA setup is still more sensitive to algorithm changes, even with the use of prerendering.

After 3 months of experiments, we collected enough data to compare both approaches:

SPA (React site) + Prerender:

- Pages got indexed, but not always consistently.

- Caching worked fine, but fresh content still appeared in Google with delays.

- Some structured data was ignored or only partially processed.

SSR (WordPress blog):

- Pages indexed quickly and reliably.

- Structured data (FAQ) was fully recognized.

- Internal linking improved crawl efficiency.

- Content started ranking for long-tail keywords within a few hours (IndexNow submission).
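For reference, an IndexNow submission is a single JSON POST request. Below is a minimal TypeScript sketch built on a few assumptions: Node.js 18+ (for the global `fetch`), a key file already hosted at the site root as `https://<host>/<key>.txt`, and the shared `https://api.indexnow.org/indexnow` endpoint. The key and URLs in the usage comment are hypothetical. Keep in mind that IndexNow is consumed by engines such as Bing and Yandex; Google does not participate in it.

```typescript
interface IndexNowPayload {
  host: string;
  key: string;
  keyLocation: string;
  urlList: string[];
}

const INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow";
const MAX_URLS_PER_REQUEST = 10000; // per-request limit in the IndexNow protocol

// Builds the JSON body; all URLs in one submission must belong to the same host.
function buildIndexNowPayload(key: string, urls: string[]): IndexNowPayload {
  if (urls.length === 0 || urls.length > MAX_URLS_PER_REQUEST) {
    throw new Error(`urlList must contain 1..${MAX_URLS_PER_REQUEST} URLs`);
  }
  const host = new URL(urls[0]).host;
  for (const u of urls) {
    if (new URL(u).host !== host) throw new Error(`URL on a different host: ${u}`);
  }
  return { host, key, keyLocation: `https://${host}/${key}.txt`, urlList: urls };
}

// Sends the payload and returns the HTTP status; 200 or 202 means it was received.
async function submitToIndexNow(payload: IndexNowPayload): Promise<number> {
  const res = await fetch(INDEXNOW_ENDPOINT, {
    method: "POST",
    headers: { "Content-Type": "application/json; charset=utf-8" },
    body: JSON.stringify(payload),
  });
  return res.status;
}

// Usage with a hypothetical key and URL:
// const status = await submitToIndexNow(
//   buildIndexNowPayload("0123456789abcdef", ["https://example.com/blog/new-post/"]),
// );
```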

Key takeaway: prerendering helped for a while, but it wasn’t a full solution. For stable long-term growth, we need SSR or hybrid rendering.

SEO Recommendations for JavaScript SPA Projects

Our experience with Ohayu has shown that without SSR or prerendering, any React SPA will lose visibility, even if the content is high-quality. Finally, I want to give some advice to colleagues, developers, and business owners who deal with React sites.

1. Don’t hesitate to use prerendering tools. We can recommend Prerender.io or Rendertron (note that Google no longer maintains Rendertron). Prerendering ensures bots at least see the content, even if indexing isn’t always stable.

2. As an alternative, build projects with SSR or hybrid rendering (Next.js, Nuxt.js, Remix). In the hybrid approach, pages can be generated in advance (static generation) and then refreshed at a set interval, without a full render on every request. This option is often considered the "golden mean": it provides the speed and stability of static content while keeping data current without a heavy server load. Within one project, you can assign rendering modes according to your goals:

- SSR (dynamic pages);

- SSG (static pages);

- ISR (incremental static regeneration: static pages updated on the fly at a set interval).

3. Always monitor crawling and indexing. GSC and server logs will help you detect indexing issues early and avoid business losses.

4. Strengthen internal linking – especially from high-authority sections (like the WordPress blog) to SPA pages.

5. Content strategy: create unique content and build high-quality internal linking. It’s not enough just to make a page accessible; you need content worthy of indexing and ranking.

6. Conduct regular technical checks: canonical tags, robots.txt, Open Graph, page speed, 4xx errors, and 301 redirects.
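The ISR idea from item 2 can be modeled in a few lines: serve pre-built HTML, then regenerate it once it is older than a set interval. Below is a conceptual TypeScript sketch of that stale-while-revalidate behavior. It is not Next.js’s implementation, and the class and parameter names are hypothetical. In Next.js itself, the interval is configured through a `revalidate` option rather than hand-written caching.

```typescript
type Renderer = (path: string) => string;

interface CacheEntry {
  html: string;
  generatedAt: number; // ms timestamp of the last render
}

// Stale-while-revalidate page cache: fresh entries are served from cache; the first
// request after the interval gets the stale copy while the page is re-rendered.
class IsrCache {
  private readonly entries = new Map<string, CacheEntry>();
  private readonly render: Renderer;
  private readonly revalidateMs: number;

  constructor(render: Renderer, revalidateMs: number) {
    this.render = render;
    this.revalidateMs = revalidateMs;
  }

  // Build-time step (like SSG): pre-render known paths.
  prebuild(paths: string[], now: number): void {
    for (const path of paths) {
      this.entries.set(path, { html: this.render(path), generatedAt: now });
    }
  }

  get(path: string, now: number): string {
    const entry = this.entries.get(path);
    if (!entry) {
      // Unknown path: render on demand once, then serve it from the cache.
      const html = this.render(path);
      this.entries.set(path, { html, generatedAt: now });
      return html;
    }
    if (now - entry.generatedAt >= this.revalidateMs) {
      // Stale: this visitor gets the cached copy, and the regenerated HTML serves the next one.
      // A real server would regenerate asynchronously; it is done inline here for clarity.
      this.entries.set(path, { html: this.render(path), generatedAt: now });
      return entry.html;
    }
    return entry.html;
  }
}

// Usage: pages rebuilt at most once per minute.
let version = 0;
const cache = new IsrCache((path) => `<h1>${path} v${version}</h1>`, 60000);
cache.prebuild(["/esim/japan/"], 0);
version = 1; // data changed after the build
console.log(cache.get("/esim/japan/", 30000)); // <h1>/esim/japan/ v0</h1> (still fresh)
console.log(cache.get("/esim/japan/", 61000)); // <h1>/esim/japan/ v0</h1> (stale copy, triggers rebuild)
console.log(cache.get("/esim/japan/", 61001)); // <h1>/esim/japan/ v1</h1>
```

This design never makes a visitor wait for a render of an already-built page. The trade-off is that one request per interval sees slightly outdated content.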
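Some of the item 6 checks can be automated against the raw HTML response, which is what non-rendering bots and social link previews read. Below is a minimal TypeScript sketch that assumes you fetch the HTML yourself. The list of required Open Graph properties is an illustrative choice, and regex matching is only a rough stand-in for a real HTML parser.

```typescript
interface HeadAudit {
  canonical: string | null;
  ogTags: Record<string, string>;
  missing: string[]; // checks that failed, canonical first
}

// Illustrative minimum set of Open Graph properties for link previews.
const REQUIRED_OG = ["og:title", "og:description", "og:image", "og:url"];

function auditHead(rawHtml: string): HeadAudit {
  // Find <link rel="canonical" ...> and pull its href, whatever the attribute order.
  const canonicalTag = rawHtml.match(/<link\b[^>]*rel=["']canonical["'][^>]*>/i);
  const canonical = canonicalTag
    ? (canonicalTag[0].match(/href=["']([^"']+)["']/i)?.[1] ?? null)
    : null;

  // Collect og:* properties from every <meta> tag.
  const ogTags: Record<string, string> = {};
  for (const tag of rawHtml.match(/<meta\b[^>]*>/gi) ?? []) {
    const prop = tag.match(/property=["'](og:[^"']+)["']/i)?.[1];
    const content = tag.match(/content=["']([^"']*)["']/i)?.[1];
    if (prop && content !== undefined) ogTags[prop.toLowerCase()] = content;
  }

  const missing = REQUIRED_OG.filter((p) => !(p in ogTags));
  if (!canonical) missing.unshift("canonical");
  return { canonical, ogTags, missing };
}
```

Run against an unprerendered SPA template, a check like this usually reports every tag as missing. That matches the broken Open Graph snippets described earlier.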

Summary: prerender is a temporary patch. For scalable SEO results, especially in competitive niches, SSR (or at least partial SSR) is the way forward.
