Basic SEO Checklist for Self-Promotion

Apply the checklist items below to your site, and add your own items as needed.

1. Keyword Research Checklist

1.1. Determine the target audience

- gender, age, marital status

- the place of residence

- education, employment

- financial and social status

- other data

1.2. Find keywords

1.2.1. Brainstorm

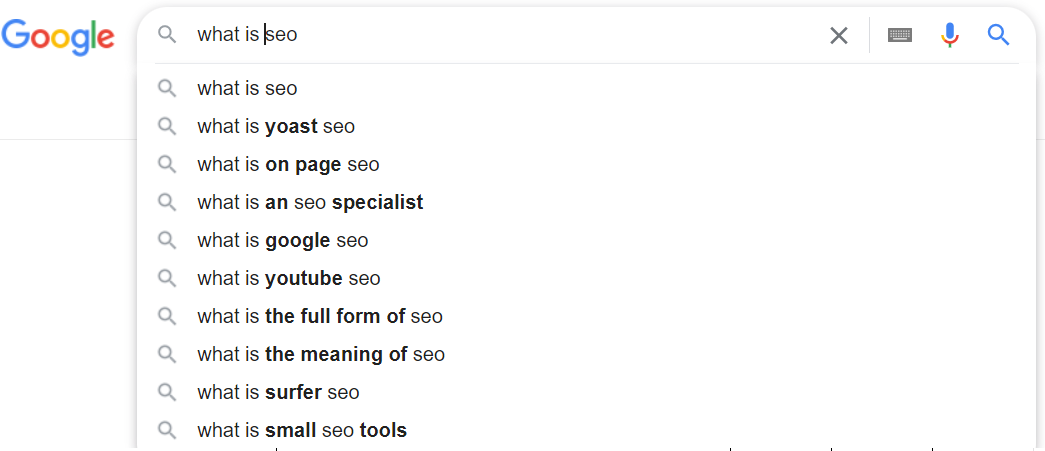

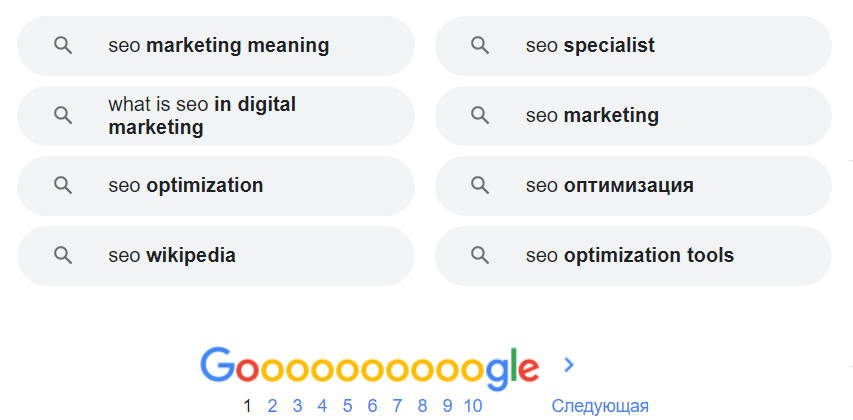

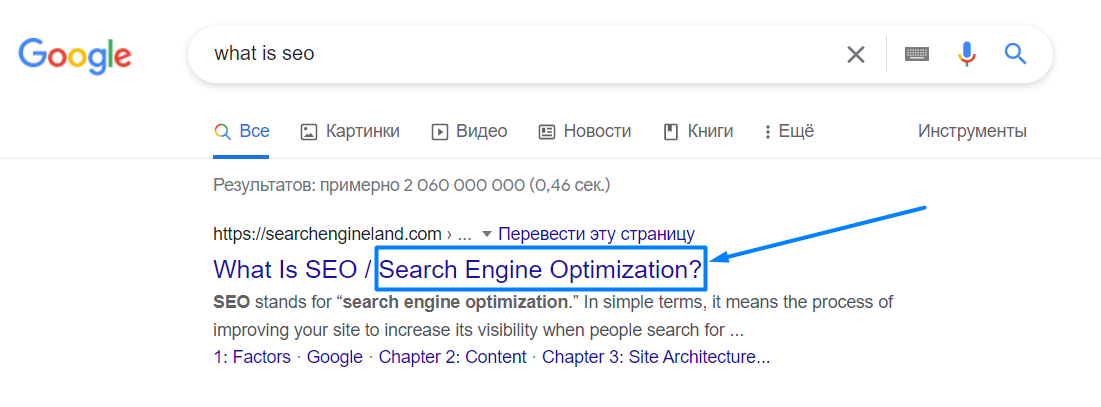

1.2.2. Google Search Suggestions

1.2.3. Statistics and keyword selection services

1.2.4. Site statistics

1.2.5. Webmaster Dashboards

1.2.6. Words used by competitors

- By analyzing HTML code (phrases in titles, meta tags, content) and link text;

- By browsing publicly available visit statistics on competitor sites;

- By using third-party services like Serpstat and Ahrefs.

1.3. Expand the query core

1.3.1. Single-word and multi-word queries

1.3.2. Synonyms and abbreviations

- "notebook"

- "netbook"

- "laptop" or simply "lappy"

- "MacBook"

1.3.3. Word combinations

- Cheap - … - in Kyiv;

- New - Laptops - Asus;

- Used - netbooks - Acer;

- The best - … - from the warehouse;
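The combination pattern above (modifier, product, qualifier) can be generated mechanically. The following is a minimal sketch, assuming three hand-made word lists; the function name `combine_keywords` and the sample words are illustrative, not part of the checklist.

```python
from itertools import product

def combine_keywords(prefixes, cores, suffixes):
    """Return every 'prefix core suffix' phrase, lowercased, without duplicates."""
    seen = set()
    phrases = []
    for parts in product(prefixes, cores, suffixes):
        # Empty strings are skipped, so an optional slot can be passed as "".
        phrase = " ".join(p.strip().lower() for p in parts if p.strip())
        if phrase and phrase not in seen:
            seen.add(phrase)
            phrases.append(phrase)
    return phrases

phrases = combine_keywords(
    ["cheap", "new", "used"],   # modifiers
    ["laptops", "netbooks"],    # product words
    ["asus", "acer", ""],       # brand qualifier, or none
)
print(len(phrases))
print(phrases[:3])
```

The generated phrases are only candidates. They still need the demand check described in item 1.4.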

1.4. Check the key phrases selected through brainstorming and combination. Are users looking for them?

1.5. View the number of search results for selected phrases

1.6. Select promising key phrases

2. Plan or Optimize Your Website Structure

2.1. Compose key phrases

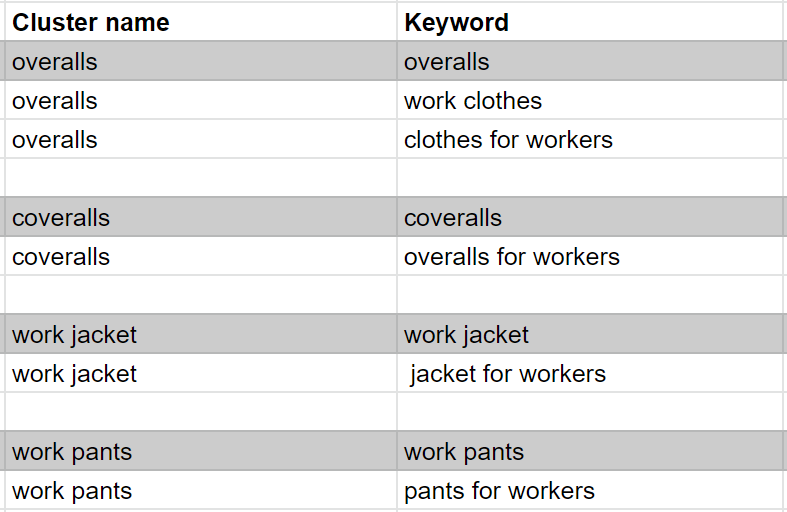

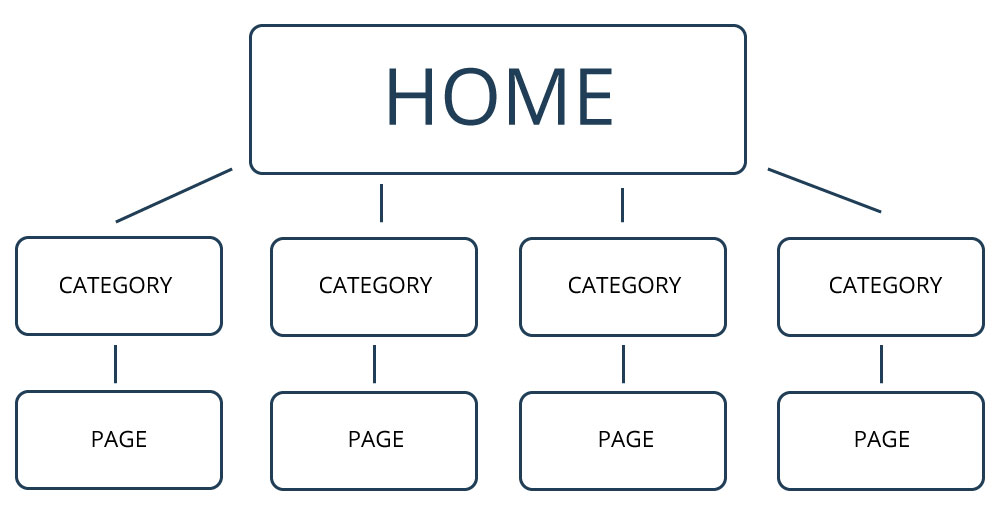

2.1.1. Group keywords into categories and subcategories

2.1.2. Create a schematic tree of the site

- Beds

- Kitchen furniture

- Children's furniture

- Furniture for the living room:

  - Coffee tables

  - Wardrobes

  - Closets

  - Chests

  - …

- Dining room

- Cushioned furniture

- …

2.2. Optimize the structure of the site

2.2.1. All navigation links are available in HTML

2.2.2. Any page is available from the main page with a maximum of two clicks
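The two-click rule can be checked with a breadth-first search over the site's internal link graph. This is a sketch under the assumption that the graph is already available as a dictionary mapping each page to the pages it links to; the names `click_depths` and `pages_too_deep` and the sample paths are hypothetical.

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search: minimum number of clicks from `home` to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def pages_too_deep(links, home, max_clicks=2):
    """List pages that need more than `max_clicks` clicks or cannot be reached."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    # Pages missing from `depths` are unreachable from the home page.
    return sorted(p for p in all_pages if depths.get(p, max_clicks + 1) > max_clicks)

site = {
    "/": ["/beds/", "/kitchen/"],
    "/beds/": ["/beds/double/"],
    "/beds/double/": ["/beds/double/oak-123/"],
    "/orphan/": [],
}
print(pages_too_deep(site, "/"))
```

Pages reported here either sit too deep in the tree or have no incoming links at all, which also relates to item 5.1.1.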

2.2.3. Important sections of the site are linked from the main page

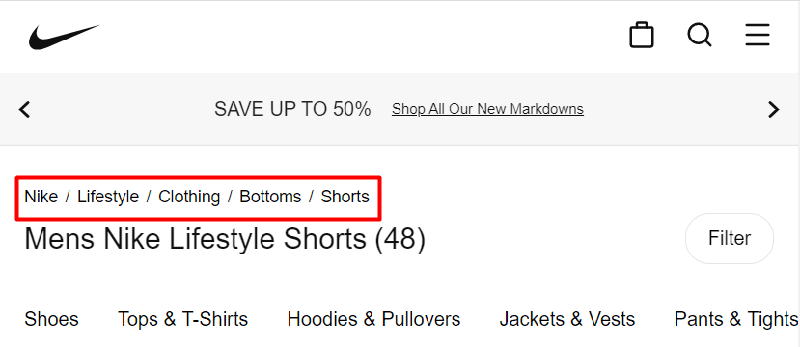

2.2.4. Use breadcrumbs

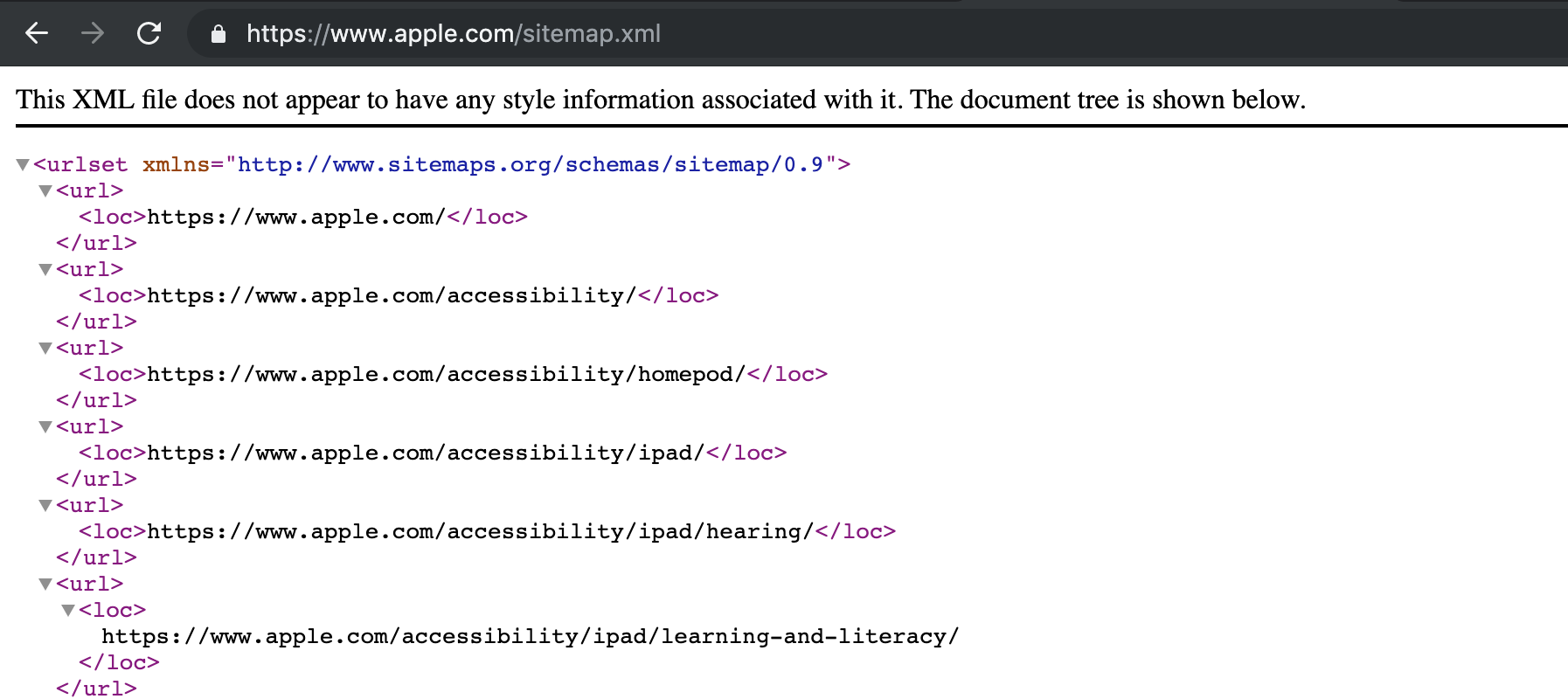
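When page addresses mirror the site tree, a breadcrumb trail can be derived from the URL path. The sketch below assumes hyphen-separated path segments and a home page at `/`; the `breadcrumbs` helper and its label formatting are illustrative choices.

```python
def breadcrumbs(path, home_label="Home"):
    """Turn '/living-room/coffee-tables/' into a list of (label, url) pairs."""
    crumbs = [(home_label, "/")]
    url = "/"
    for segment in path.strip("/").split("/"):
        if not segment:
            continue
        url += segment + "/"
        # Assumption: the label is simply the segment with hyphens as spaces.
        crumbs.append((segment.replace("-", " ").capitalize(), url))
    return crumbs

for label, url in breadcrumbs("/living-room/coffee-tables/"):
    print(label, url)
```

On a real site the labels would more likely come from the category names in the CMS than from the URL itself.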

2.2.5. Create sitemap.xml

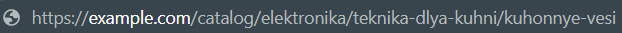
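A basic sitemap.xml is a `urlset` element in the sitemaps.org namespace with one `url`/`loc` entry per page. The following sketch builds one with the Python standard library; the `build_sitemap` name and the example.com URLs are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in the URL text.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

sitemap = build_sitemap([
    "https://example.com/",
    "https://example.com/beds/",
])
print(sitemap)
```

Most CMS platforms and SEO plugins generate this file automatically, so a script like this is mainly useful for static or custom-built sites.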

2.3. Optimize page URLs

2.3.1. Reflect site structure in the page address

2.3.2. Human-friendly URLs for internal pages

2.3.3. Short internal page URLs
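The three URL rules above can be combined in a small helper that turns section names and a page title into a readable address. This is a sketch only: the `slugify` and `page_url` names, the 60-character cap, and the ASCII-only transliteration are assumptions, not requirements from the checklist.

```python
import re
import unicodedata

def slugify(title, max_length=60):
    """Lowercase ASCII slug with hyphens, trimmed to roughly `max_length`."""
    # Decompose accented characters and drop anything outside ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
    if len(slug) > max_length:
        # Cut at the last hyphen so the slug does not end mid-word.
        slug = slug[:max_length].rsplit("-", 1)[0]
    return slug

def page_url(sections, title):
    """Build a path that mirrors the site tree: /section/subsection/page/."""
    parts = [slugify(s) for s in sections] + [slugify(title)]
    return "/" + "/".join(p for p in parts if p) + "/"

print(page_url(["Living Room Furniture", "Coffee Tables"], "Oak Coffee Table, 120 cm"))
```

Non-Latin titles would need a proper transliteration step before this function, since the ASCII filter simply drops characters it cannot decompose.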

3. Technical SEO Checklist

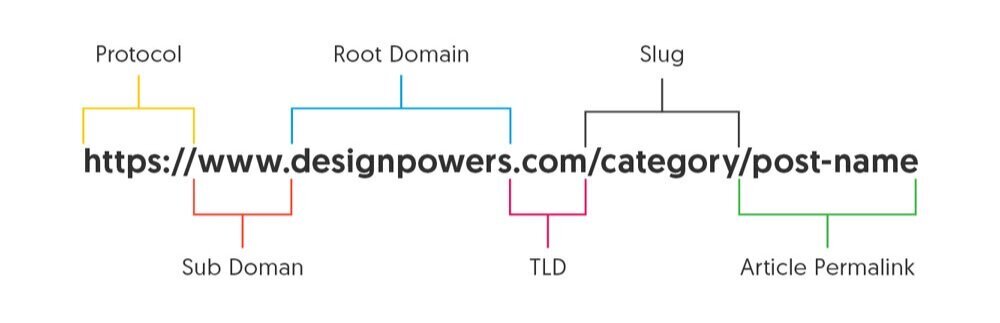

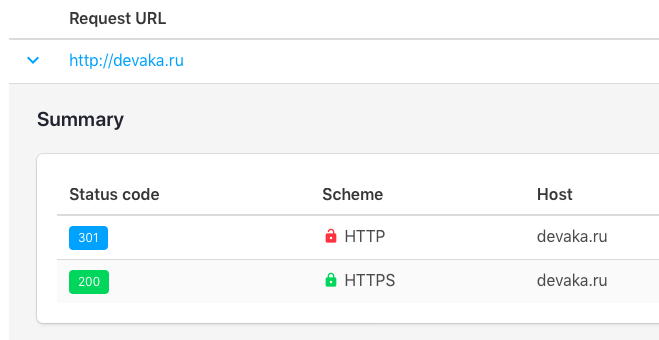

3.1. Configure HTTPS

3.2. Speed up the site

3.2.1. The size of the HTML code does not exceed 100-200 kilobytes

3.2.2. Page load time does not exceed 3-5 seconds

3.2.3. The page's HTML code contains no unnecessary clutter

3.2.4. Optimize images

3.2.5. Configure caching

3.2.6. Enable compression

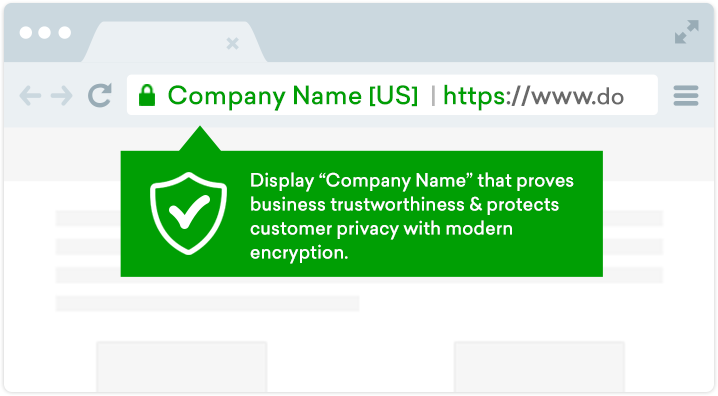

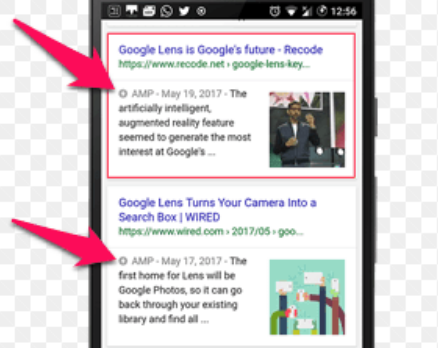
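To see how the HTML size limit from item 3.2.1 and compression interact, the page source can be measured before and after gzip. The sketch below works on an HTML string already in memory; `html_weight` is a hypothetical helper, and the 200 KB default is taken from the upper bound in the checklist.

```python
import gzip

def html_weight(html, limit_kb=200):
    """Report raw and gzip-compressed size of an HTML document in kilobytes."""
    raw = html.encode("utf-8")
    compressed = gzip.compress(raw)
    return {
        "raw_kb": round(len(raw) / 1024, 1),
        "gzip_kb": round(len(compressed) / 1024, 1),
        "within_limit": len(raw) <= limit_kb * 1024,
    }

# Repetitive markup, like long product listings, compresses very well.
sample_html = "<div class='item'>Bed</div>\n" * 2000
report = html_weight(sample_html)
print(report)
```

This only estimates the transfer saving. Whether the server actually sends compressed responses has to be verified separately, for example by inspecting the `Content-Encoding` response header.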

3.2.7. Set up AMP pages

3.3. Optimize site indexing

3.3.1. Good server uptime

3.3.2. Sitemap.xml added to the webmaster panel

3.3.3. Content that is important for indexing is not rendered only by JavaScript

3.3.4. No frames are used

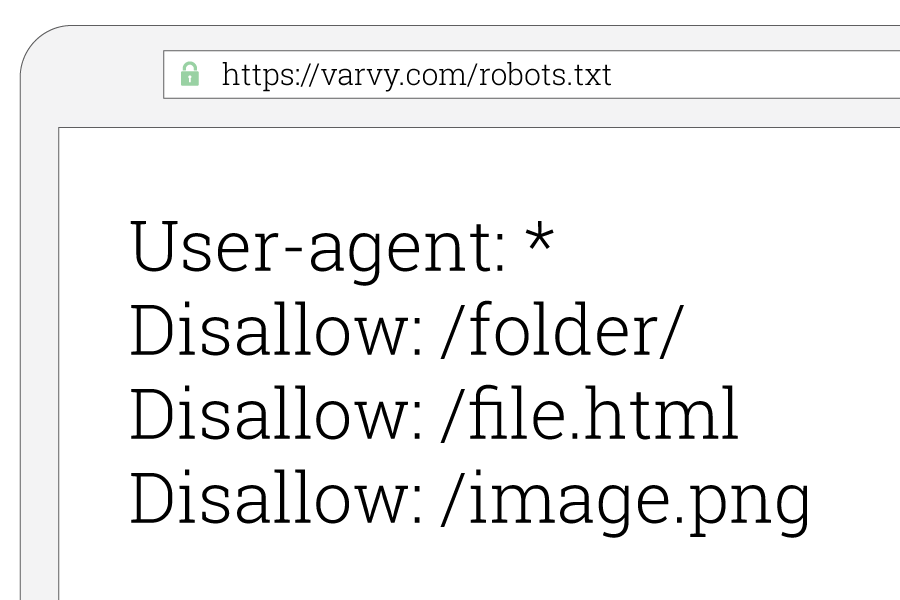

3.3.5. Server logs, the admin panel, and subdomains hosting test versions of the site are blocked from indexing

3.3.6. Pages declare the correct character encoding

3.4. Get rid of duplicates

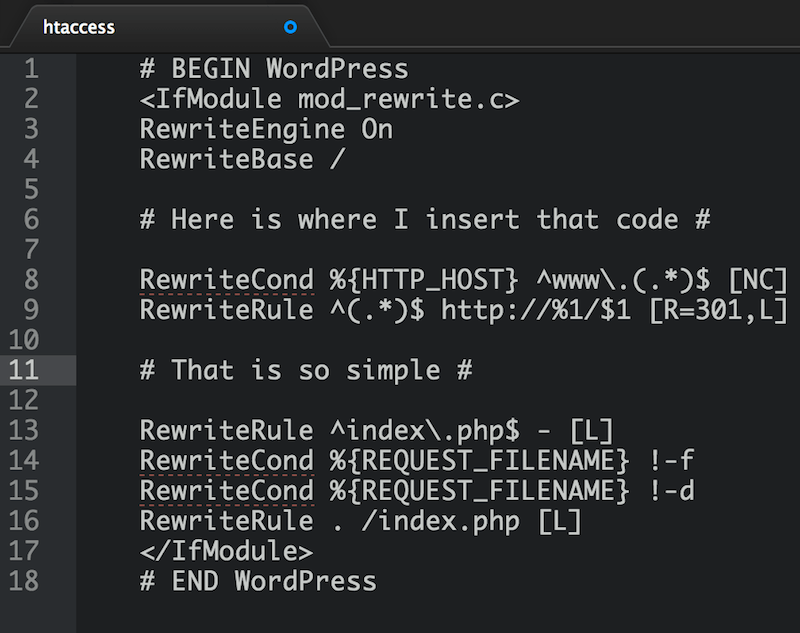

3.4.1. Primary mirror selected (with or without www)

3.4.2. Customized robots.txt file
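Before publishing robots.txt rules, it helps to confirm that they block what was intended and nothing else. The sketch below parses a sample file with Python's standard `urllib.robotparser`; the rules, paths, and the example.com host are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /logs/
"""

parser = RobotFileParser()
# parse() accepts the file content as a list of lines, so no network is needed.
parser.parse(ROBOTS_TXT.splitlines())

def is_crawlable(path, user_agent="Googlebot"):
    """True if the sample rules allow `user_agent` to fetch the path."""
    return parser.can_fetch(user_agent, "https://example.com" + path)

for path in ["/", "/beds/", "/admin/login", "/logs/access.log"]:
    print(path, "allowed" if is_crawlable(path) else "blocked")
```

Note that robots.txt controls crawling. A blocked URL can still end up in the index if other sites link to it, so sensitive areas such as admin panels and test subdomains are usually protected by authentication as well.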

3.4.3. Home page not available at /index.php or /index.html

3.4.4. Old URLs redirect visitors to new pages

3.4.5. Rel=canonical is used
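Several of the duplicate rules above (primary mirror, no `/index.php` home page, one address per page) come down to mapping every URL variant to a single canonical form. The following is a sketch, assuming HTTPS, a non-www primary host, and that query strings do not change page content; all three are assumptions to adjust per site, and the function names are hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

def canonical_url(url, primary_host="example.com"):
    """Collapse www/non-www, index files, query strings and fragments."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    # Assumption: `primary_host` is the bare (non-www) mirror.
    if host == "www." + primary_host:
        host = primary_host
    path = parts.path or "/"
    for index_name in ("index.php", "index.html"):
        if path.endswith("/" + index_name):
            path = path[: -len(index_name)]
    # Assumption: query parameters are tracking-only and can be dropped.
    return urlunsplit(("https", host, path, "", ""))

def canonical_link_tag(url, primary_host="example.com"):
    """Render the <link rel="canonical"> element for the page head."""
    href = escape(canonical_url(url, primary_host), quote=True)
    return f'<link rel="canonical" href="{href}">'

print(canonical_link_tag("http://www.example.com/beds/index.html?utm_source=mail"))
```

If some query parameters do select different content (pagination, filters), they would need to be kept rather than stripped.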

3.4.6. Non-existent pages return a 404 error

3.4.7. Check the site using Netpeak Spider

3.5. Users and robots see the same content

3.6. Reliable hosting is used!

3.6.1. Virus protection is used

3.6.2. Protection against DDoS attacks is used

3.6.3. Backups are configured

3.6.4. Server availability monitoring is configured

3.7. The site is registered in the webmaster panel

4. On-page and Content SEO Checklist

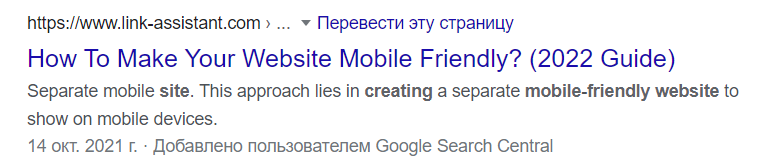

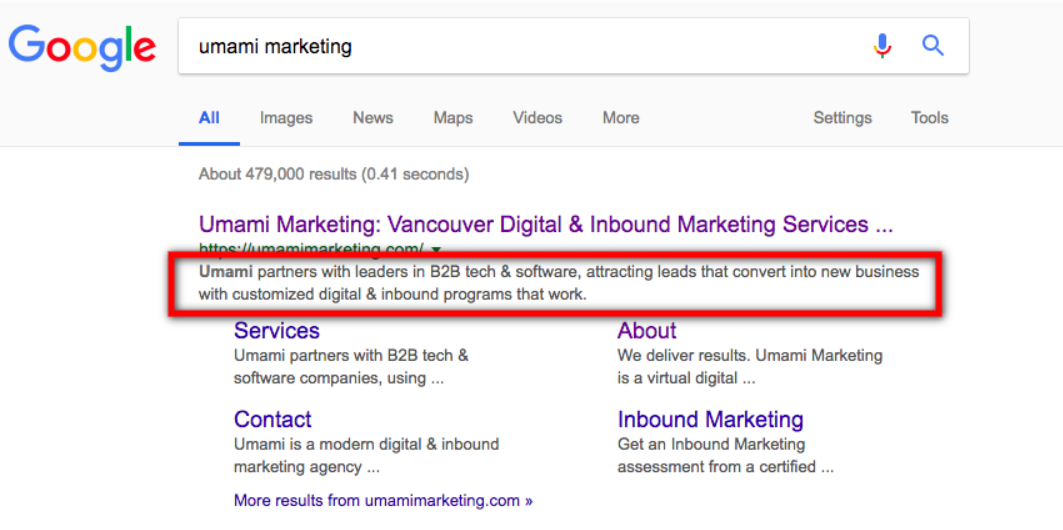

4.1. Optimize <title> tags

4.1.1. Use a short and descriptive title

4.1.2. Reflect the content of the page in the title

4.1.3. Make titles attractive to click

4.1.4. Use keywords in the title

4.1.5. Insert important words at the beginning of the title

4.1.6. The title is unique across the web

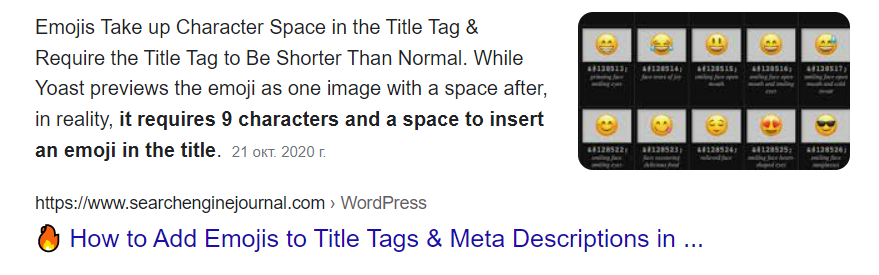

4.1.7. Emojis are used

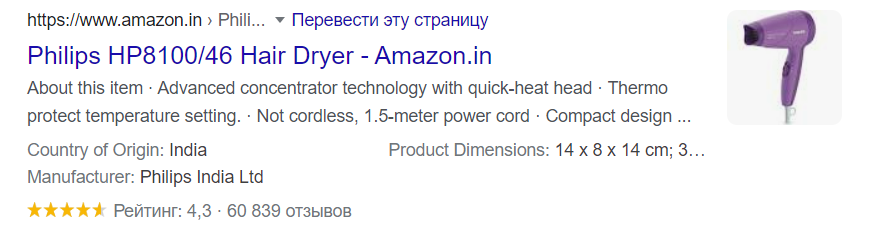

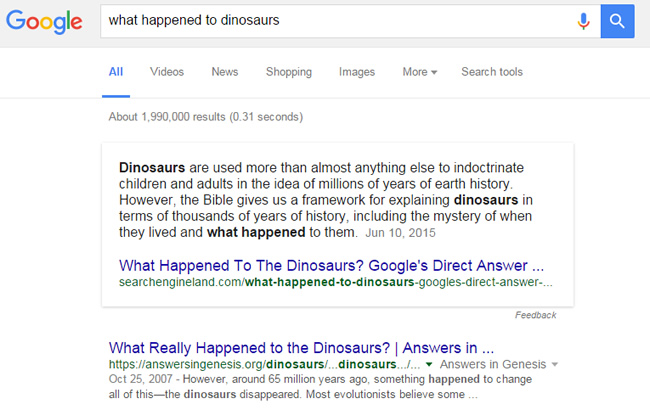

4.2. Optimize snippets

4.2.1. The meta description is no longer than 250 characters

4.2.2. The description attracts attention and encourages the user to act

4.2.3. The description contains the keyword
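The title and description rules can be checked automatically for each page. The sketch below parses an HTML string with the standard `html.parser` module; the `HeadAudit` class, the `audit_head` function, and the sample page are illustrative, and the 250-character limit comes from item 4.2.1.

```python
from html.parser import HTMLParser

class HeadAudit(HTMLParser):
    """Collects the <title> text and the meta description content."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def audit_head(html, keyword, max_description=250):
    parser = HeadAudit()
    parser.feed(html)
    parser.close()
    title = parser.title.strip()
    description = (parser.description or "").strip()
    return {
        "title_length": len(title),
        "title_has_keyword": keyword.lower() in title.lower(),
        "description_length_ok": 0 < len(description) <= max_description,
        "description_has_keyword": keyword.lower() in description.lower(),
    }

PAGE = """<html><head>
<title>Buy a gaming laptop in Kyiv | Example Shop</title>
<meta name="description" content="Compare gaming laptop prices and order online.">
</head><body></body></html>"""

head_report = audit_head(PAGE, "gaming laptop")
print(head_report)
```

A substring match is a crude keyword test. It does not catch inflected forms or reordered phrases, so treat a negative result as a prompt to look at the page rather than as a verdict.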

4.2.4. Use microdata markup

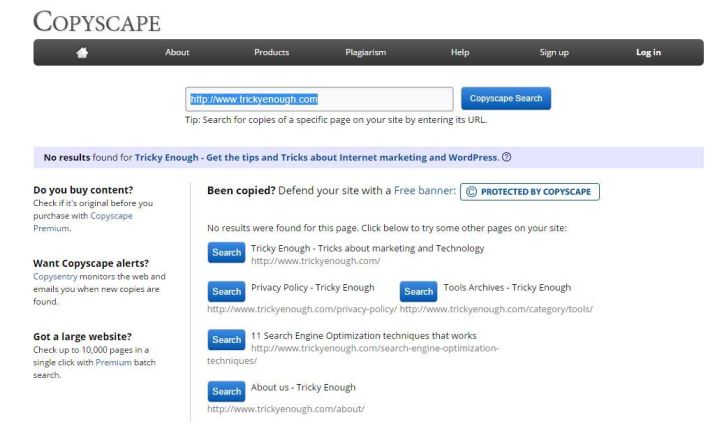
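As an illustration of microdata markup, the sketch below renders a product block using schema.org's `Product` and `Offer` types through `itemscope`, `itemtype`, and `itemprop` attributes. The `product_microdata` helper and the sample values are hypothetical; which properties a page needs depends on the content type and the search engine's guidelines.

```python
from html import escape

def product_microdata(name, price, currency):
    """Return an HTML fragment describing a product with schema.org microdata."""
    return (
        '<div itemscope itemtype="https://schema.org/Product">\n'
        f'  <span itemprop="name">{escape(name)}</span>\n'
        '  <div itemprop="offers" itemscope itemtype="https://schema.org/Offer">\n'
        f'    <meta itemprop="priceCurrency" content="{escape(currency)}">\n'
        f'    <span itemprop="price" content="{price:.2f}">{price:.2f}</span>\n'
        '  </div>\n'
        '</div>'
    )

snippet = product_microdata("Oak & Steel Coffee Table", 149.5, "USD")
print(snippet)
```

The generated fragment should be validated with a structured data testing tool before it goes live.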

4.3. Optimize content

4.3.1. Use unique content

4.3.2. Format content

4.3.3. Insert keyword phrases in H1-H6

4.3.4. The key phrase occurs in the text

4.3.5. There is no invisible text

4.3.6. There is no duplicate content

4.3.7. Keywords are used in the alt attribute (if there are images)

4.3.8. There are no pop-up ads that cover the main content

4.3.9. The text on the page consists of at least 250 words
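Two of the content checks above, the 250-word minimum and images with alt text, are easy to automate. The following is a rough sketch using the standard `html.parser` module; `ContentAudit` and `audit_content` are hypothetical names, and the word count is approximate because it includes all text outside `<script>` and `<style>`, navigation included.

```python
from html.parser import HTMLParser

class ContentAudit(HTMLParser):
    """Counts visible words and records images without alt text."""

    def __init__(self):
        super().__init__()
        self.words = 0
        self.images_missing_alt = []
        self._skip = 0  # nesting depth inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt.append(attrs.get("src"))

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.words += len(data.split())

def audit_content(html, min_words=250):
    parser = ContentAudit()
    parser.feed(html)
    parser.close()
    return {
        "words": parser.words,
        "enough_words": parser.words >= min_words,
        "images_missing_alt": parser.images_missing_alt,
    }

PAGE = (
    "<h1>Oak coffee table</h1>"
    '<img src="a.png" alt="Oak coffee table">'
    '<img src="b.png">'
    "<script>var x = 1;</script>"
)
content_report = audit_content(PAGE)
print(content_report)
```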

5. Off-page SEO Checklist

5.1. Optimize internal links

5.1.1. Pages have at least one text link

Pages with no incoming text links run into two problems:

- they are harder to index, since search robots may not find them;

- they carry little internal link weight, which hinders their promotion in search engines.

5.1.2. The number of internal links on the page is not more than 200
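Counting the internal links on a page is straightforward once relative addresses are resolved against the page URL. This sketch uses only the standard library; `LinkCollector`, `internal_links`, and the sample markup are illustrative, and "internal" is assumed to mean "same host as the page".

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

class LinkCollector(HTMLParser):
    """Collects absolute URLs from all <a href> elements."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links such as "/beds/" against the page URL.
                self.links.append(urljoin(self.base_url, href))

def internal_links(html, page_url):
    collector = LinkCollector(page_url)
    collector.feed(html)
    collector.close()
    site_host = urlsplit(page_url).netloc
    return [u for u in collector.links if urlsplit(u).netloc == site_host]

PAGE = (
    '<a href="/beds/">Beds</a>'
    '<a href="https://example.com/kitchen/">Kitchen</a>'
    '<a href="https://other.example.org/">Partner</a>'
    '<a href="mailto:hi@example.com">Mail</a>'
)
found = internal_links(PAGE, "https://example.com/catalog/")
print(len(found), "internal links; limit from the checklist is 200")
```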

5.1.3. Keywords are used in internal links

- laptop price discount;

- laptop Kyiv cheap.

- buy a gaming laptop;

- a gaming laptop for a child;

- how much does a gaming laptop cost, etc.

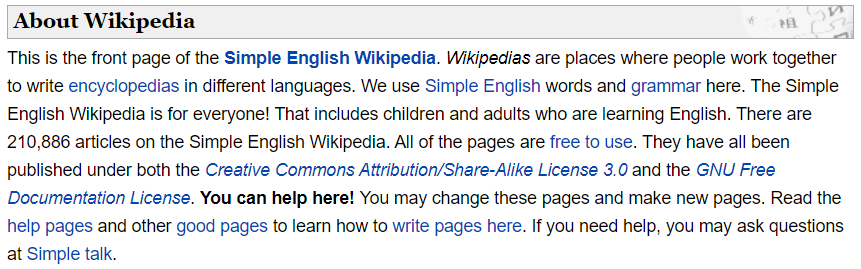

5.1.4. The Wikipedia principle is applied

5.1.5. Primary navigation is accessible to non-JavaScript crawlers

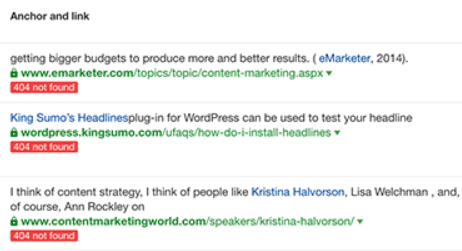

5.1.6. All links work (no broken links)!

5.2. Moderate outgoing links

5.2.1. Control the quantity and quality of external outgoing links

5.2.2. Irrelevant and unmoderated outgoing links are marked with rel=nofollow
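To review outgoing links, it helps to list every external link that does not carry `rel="nofollow"` and then decide case by case whether it should. The sketch below is one way to produce that list with the standard library; `NofollowAudit`, `followed_external_links`, and the sample hosts are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

class NofollowAudit(HTMLParser):
    """Records external <a> links whose rel attribute lacks 'nofollow'."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host
        self.followed_external = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href") or ""
        host = urlsplit(href).netloc
        # rel may hold several space-separated tokens, e.g. "nofollow sponsored".
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if host and host != self.site_host and "nofollow" not in rel_tokens:
            self.followed_external.append(href)

def followed_external_links(html, site_host):
    audit = NofollowAudit(site_host)
    audit.feed(html)
    audit.close()
    return audit.followed_external

PAGE = (
    '<a href="/beds/">Beds</a>'
    '<a href="https://ads.example.net/x" rel="nofollow sponsored">Ad</a>'
    '<a href="https://forum.example.org/t/1">Thread</a>'
)
print(followed_external_links(PAGE, "example.com"))
```

Not every link in the output is a problem. Relevant, editorially chosen links can stay followed; the list is a starting point for moderation.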

5.2.3. Links posted by visitors are moderated

5.2.4. There is no link farm

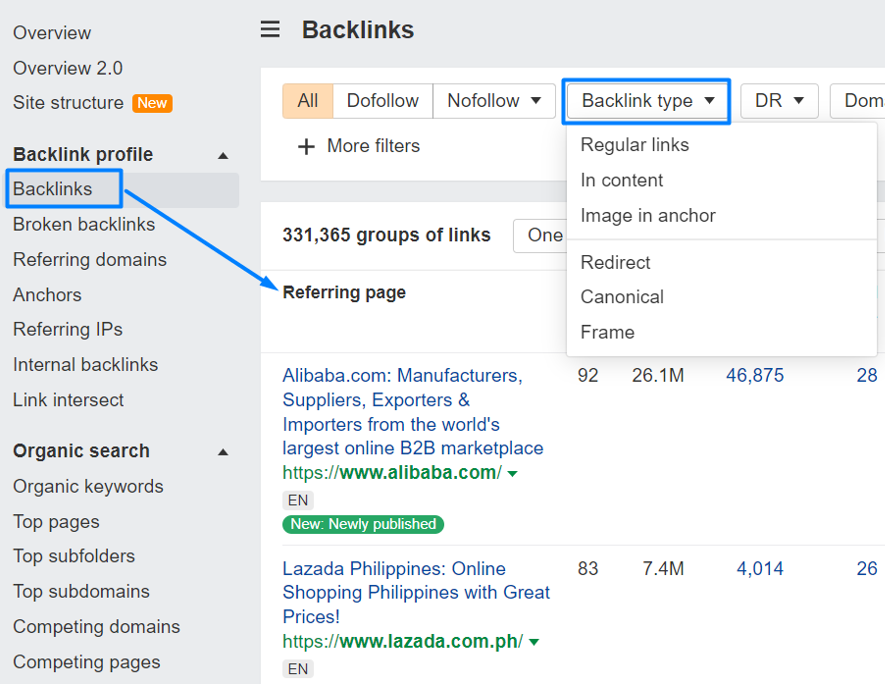

5.3. Post backlinks to the site

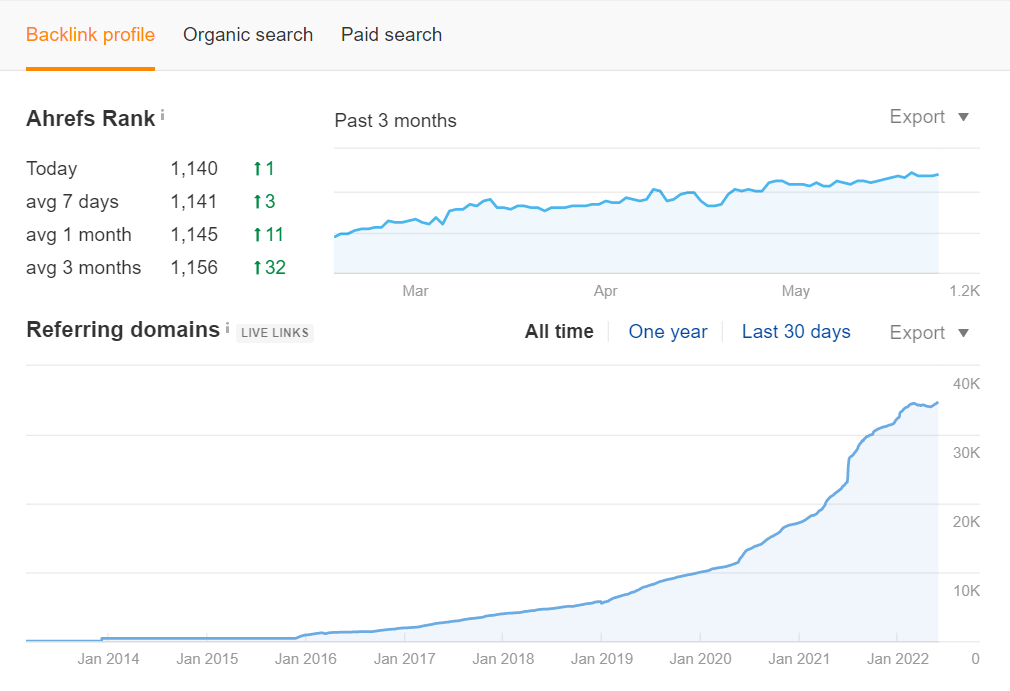

5.3.1. Positive link profile dynamics

5.3.2. Work is underway to increase the authority of the site

- ahrefs.com;

- majestic.com;

- moz.com and others.

- links from main pages;

- links from guest articles on trusted sites;

- sitewide links.

5.3.3. External link anchors do not use keywords

5.3.4. Unique domains are used

5.3.5. Links come from topically relevant pages

5.3.6. The range of donor sites is expanding

- personal blogs of air conditioner installers;

- repair services sites;

- sites of construction and design services;

- sites about technology;

- sites that have a section about technology;

- business sites;

- media platforms.

5.3.7. Tracking of received links is set up

- linkchecker.pro;

- ahrefs.com;

- others.

5.3.8. Development of the link profile is tracked

- pay attention to new links and their source;

- make sure there are no spammy links from competitors;

- control the anchor list.
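Controlling the anchor list and the number of unique donor domains can start from a simple export of backlinks. The sketch below assumes the data is a list of `(source_url, anchor_text)` pairs, for example copied from a backlink tool's report; `link_profile` and the sample rows are made up for illustration.

```python
from collections import Counter
from urllib.parse import urlsplit

def link_profile(backlinks):
    """Summarize unique donor domains and the share of each anchor text."""
    anchors = Counter(anchor.strip().lower() for _, anchor in backlinks)
    domains = {urlsplit(source).netloc.lower() for source, _ in backlinks}
    total = len(backlinks)
    shares = {anchor: count / total for anchor, count in anchors.most_common()}
    return {"unique_domains": len(domains), "anchor_shares": shares}

backlinks = [
    ("https://blog-a.example/post", "Example Shop"),
    ("https://blog-a.example/other", "example shop"),
    ("https://news-b.example/item", "buy gaming laptop"),
    ("https://forum-c.example/t/9", "https://example.com/"),
]
profile = link_profile(backlinks)
print(profile["unique_domains"])
for anchor, share in profile["anchor_shares"].items():
    print(f"{share:.0%}  {anchor}")
```

A large share of keyword-rich anchors such as "buy gaming laptop" is the pattern item 5.3.3 warns about, so that line of the output deserves the most attention.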

Launching a site takes extensive, painstaking work. Many details have to be handled correctly for search engines to favor the resource from day one. A detailed free checklist helps you organize the work and cover all the requirements for on-page, technical, and off-page SEO.

Yes, you can. An SEO checklist for new websites helps you account for all the details and optimize the site properly before launch.

Collaborator's on-page SEO checklist covers the most important points of do-it-yourself site promotion: keyword analysis, site structure planning, and content optimization. The technical SEO checklist helps you set up technical elements, and the off-page SEO checklist deals with internal linking and backlinks to the site. If necessary, you can add your own items to check.

Our search engine optimization checklist can be useful to:

- SEO specialists,

- team leaders of SEO teams,

- marketers,

- webmasters,

- business owners.

Yes, you can. With our SEO checklist, you can audit the current state of the site and adjust the SEO specialist's priorities accordingly.

Yes. Anyone can use the SEO checklist for free.