Basic SEO Checklist for Self-Promotion

Apply these items to your site. Add your own items if necessary.

1. Keyword research checklist

1.1. Define the target audience

- gender, age, marital status

- place of residence

- education, employment

- financial and social status

- other data

1.2. Find keywords

1.2.1. Brainstorming

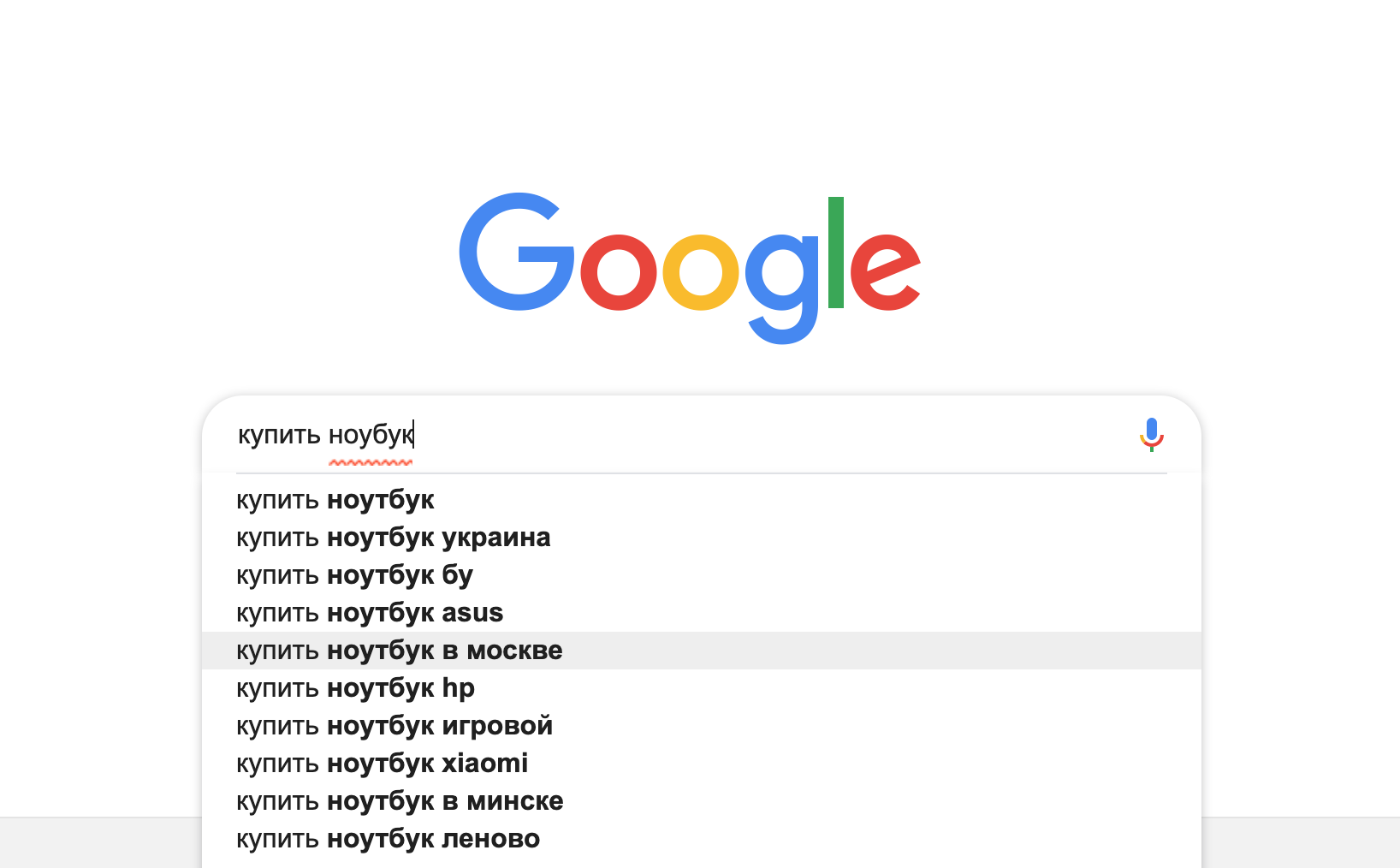

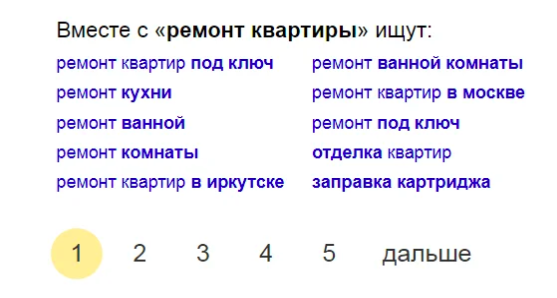

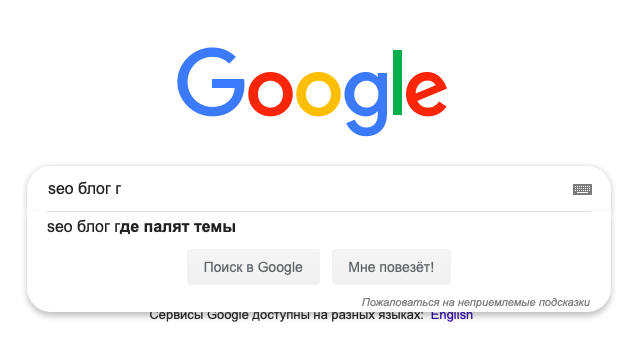

1.2.2. Google search suggestions

1.2.3. Keyword statistics and selection services

1.2.4. Site statistics

1.2.5. Webmaster panels

1.2.6. Words used by competitors

- by analyzing the HTML code (phrases in titles, meta tags, content) and text links;

- by browsing publicly available site traffic statistics;

- by using third-party services such as Serpstat and Ahrefs.

1.3. Expand the query core

1.3.1. Single-word and multi-word queries

1.3.2. Synonyms and abbreviations

- "notebook"

- "netbook"

- "laptop" or simply "lappy"

- macbook.

1.3.3. Word combinations

- Cheap - … - in Kyiv;

- New - laptops - Asus;

- Used - netbooks - Acer;

- Best - … - from the warehouse.
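The combinations in item 1.3.3 can be generated mechanically rather than typed by hand. The following is a minimal sketch, assuming three illustrative word lists (a modifier, a product, and a qualifier); the function name `combine_keywords` and the sample words are hypothetical and should be replaced with your own query core.

```python
from itertools import product


def combine_keywords(modifiers, products, qualifiers):
    """Return every 'modifier product qualifier' phrase, lowercased."""
    return [
        " ".join(parts).lower()
        for parts in product(modifiers, products, qualifiers)
    ]


# Illustrative word lists only; they are not taken from a real keyword tool.
phrases = combine_keywords(
    ["cheap", "new", "used"],
    ["laptops", "netbooks"],
    ["Asus", "Acer", "in Kyiv"],
)
print(len(phrases))  # 18
print(phrases[0])    # cheap laptops asus
```

The generated phrases are only candidates; they still need the demand check described in item 1.4.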

1.4. Check the key phrases selected through brainstorming and combination. Do users actually search for them?

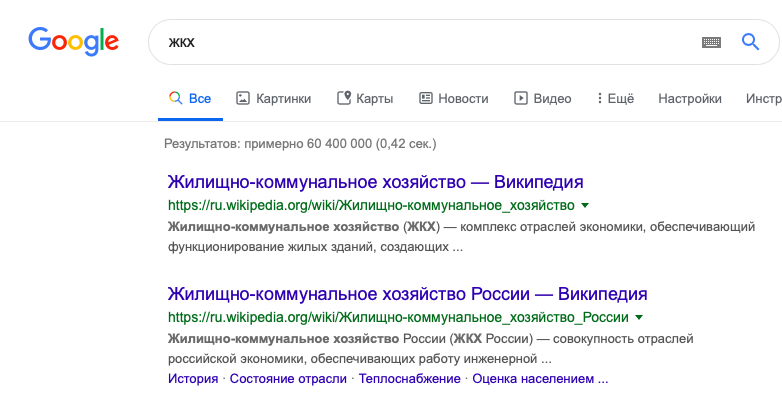

1.5. Check the number of search results for the selected phrases

1.6. Select promising key phrases

2. Plan or optimize your website structure

2.1. Compile the key phrases

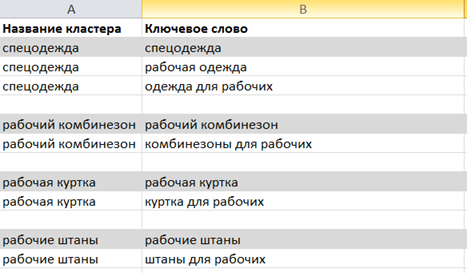

2.1.1. Group keywords into categories and subcategories

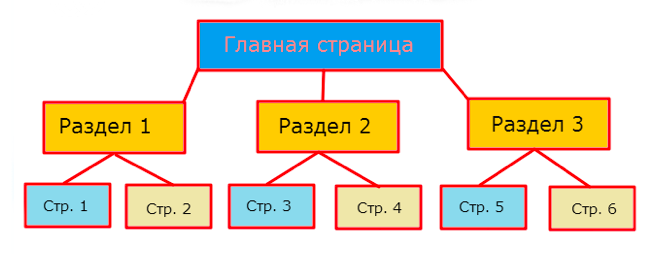

2.1.2. Create a schematic site tree

- beds

- kitchen furniture

- children's furniture

- living room furniture:

  - coffee tables

  - wardrobes

  - cupboards

  - chests

  - …

- dining room

- upholstered furniture

- …

2.2. Optimize the site structure

2.2.1. All navigation links are available in HTML

2.2.2. Any page can be reached from the home page in no more than two clicks

2.2.3. Important site sections are linked from the home page

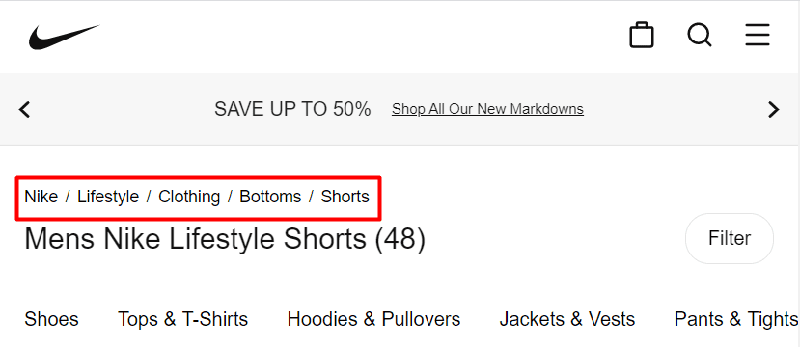

2.2.4. Use breadcrumbs

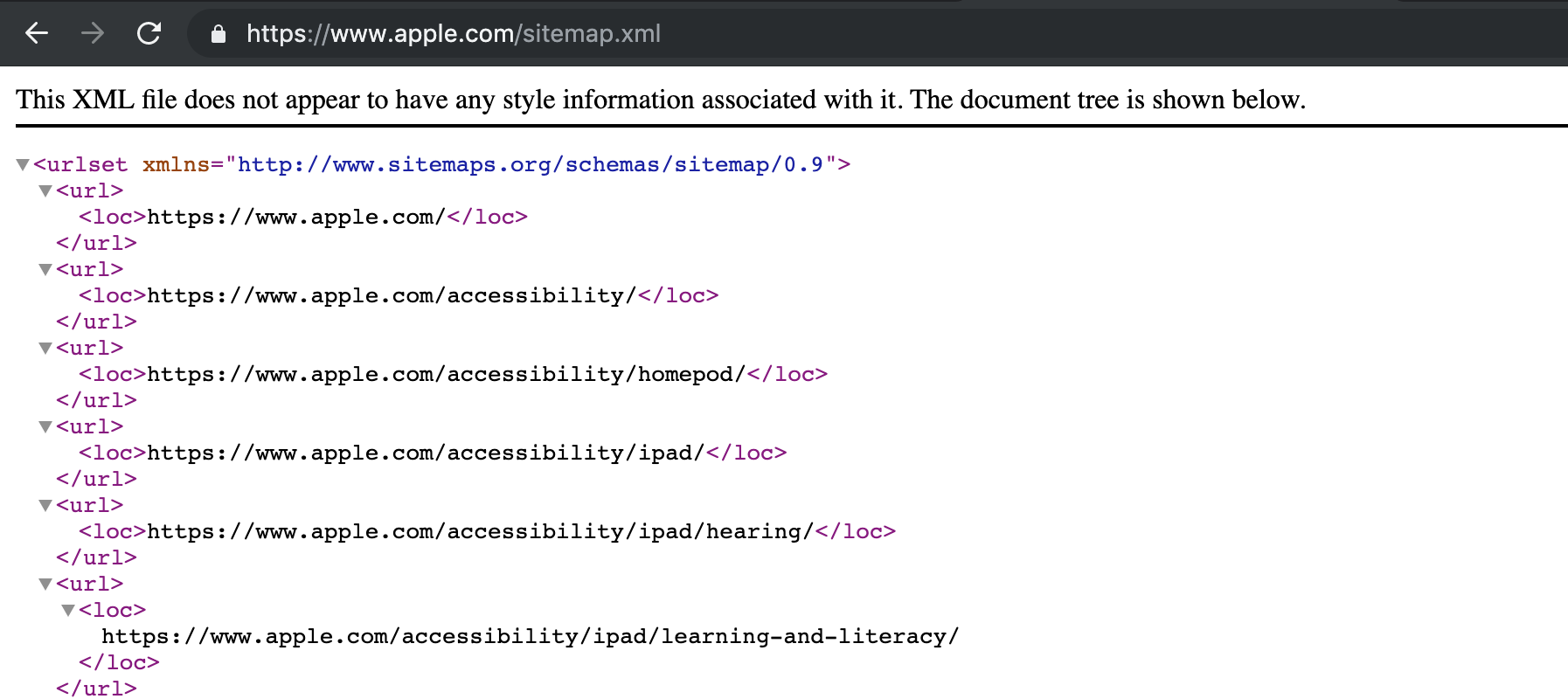

2.2.5. Create sitemap.xml

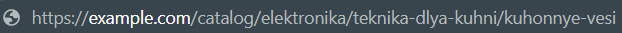

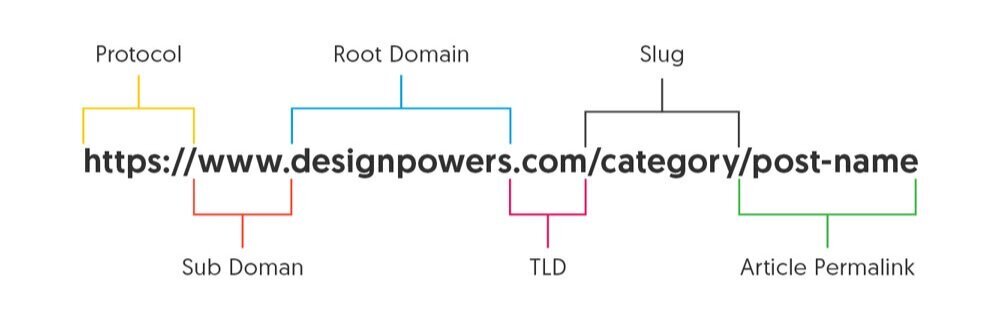
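For item 2.2.5, a sitemap.xml file is a list of `<url>` entries inside a `<urlset>` element in the sitemaps.org namespace. The sketch below builds a minimal file with Python's standard library; the function name `build_sitemap` and the example.com URLs are assumptions for illustration, and optional fields such as `lastmod` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap.xml document as a string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as '&' in the URL for us.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URLs for illustration.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/living-room-furniture/coffee-tables/",
])
print(sitemap_xml)
```

In practice most CMS platforms generate this file automatically, so a hand-rolled script is mainly useful for static sites.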

2.3. Optimize page URLs

2.3.1. Reflect the site structure in the page address

2.3.2. Use human-friendly URLs for internal pages

2.3.3. Keep internal page URLs short
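Items 2.3.1 to 2.3.3 can be combined in one helper that turns category names into a short, readable path mirroring the site tree. This is a sketch under stated assumptions: `slugify` and `build_path` are hypothetical names, and the transliteration simply drops accents, which may not suit every language.

```python
import re
import unicodedata


def slugify(text):
    """Lowercase the text, strip accents, and join words with hyphens."""
    ascii_text = (
        unicodedata.normalize("NFKD", text)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


def build_path(*segments):
    """Build a URL path that mirrors the category hierarchy."""
    return "/" + "/".join(slugify(segment) for segment in segments) + "/"


print(build_path("Living Room Furniture", "Coffee Tables"))
# /living-room-furniture/coffee-tables/
```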

3. Technical SEO checklist

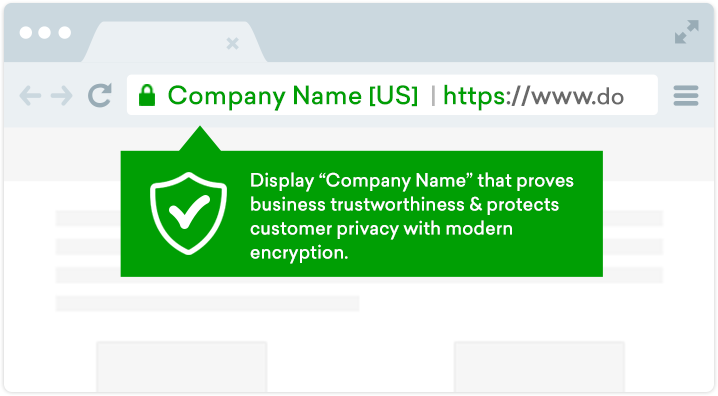

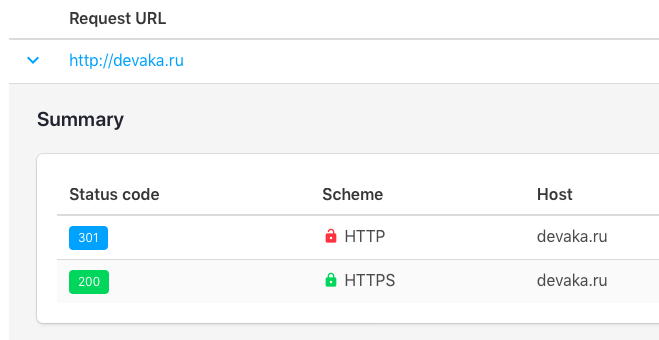

3.1. Set up HTTPS

3.2. Speed up the site

3.2.1. The HTML code size does not exceed 100-200 kilobytes

3.2.2. The page load time does not exceed 3-5 seconds

3.2.3. There is no unnecessary clutter in the page's HTML code

3.2.4. Optimize images

3.2.5. Set up caching

3.2.6. Enable compression

3.2.7. Set up AMP pages
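Items 3.2.1 and 3.2.6 can be spot-checked offline once you have a page's HTML as a string. The sketch below is an illustration, not a benchmark: it assumes the upper bound of 200 KB from item 3.2.1 as the limit, uses gzip only to estimate what compression might save, and the name `page_weight` is hypothetical.

```python
import gzip

MAX_HTML_BYTES = 200 * 1024  # upper bound of the 100-200 KB range in item 3.2.1


def page_weight(html, limit=MAX_HTML_BYTES):
    """Report the raw and gzip-compressed size of an HTML document."""
    raw = html.encode("utf-8")
    return {
        "raw_bytes": len(raw),
        "gzip_bytes": len(gzip.compress(raw)),
        "within_limit": len(raw) <= limit,
    }


sample = "<p>hello</p>" * 1000
report = page_weight(sample)
print(report["raw_bytes"], report["within_limit"])  # 12000 True
```

Load time (item 3.2.2) depends on the network and the browser, so it is better measured with a dedicated page speed tool than with a script like this.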

3.3. Optimize site indexing

3.3.1. Good server uptime

3.3.2. Sitemap.xml is added to the webmaster panel

3.3.3. JavaScript does not contain content important for indexing

3.3.4. No frames are used

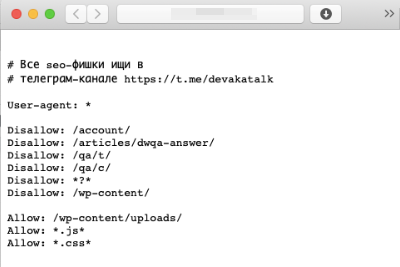

3.3.5. Server logs, the admin panel, and subdomains hosting the test version of the site are closed to indexing

3.3.6. Pages declare the appropriate encoding
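One common way to keep crawlers out of the areas named in item 3.3.5 is a set of `Disallow` rules in robots.txt, and the rules can be tested before deployment. The sketch below uses Python's standard `urllib.robotparser`; the paths `/admin/` and `/logs/`, the example.com URLs, and the helper name `is_crawlable` are assumptions for illustration. Keep in mind that robots.txt controls crawling, so sensitive areas usually also need authentication.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; adjust the paths to your own site layout.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /logs/
"""


def is_crawlable(robots_txt, url, agent="*"):
    """Return True if the given robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


print(is_crawlable(ROBOTS_TXT, "https://example.com/admin/login"))  # False
print(is_crawlable(ROBOTS_TXT, "https://example.com/catalog/"))     # True
```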

3.4. Get rid of duplicates

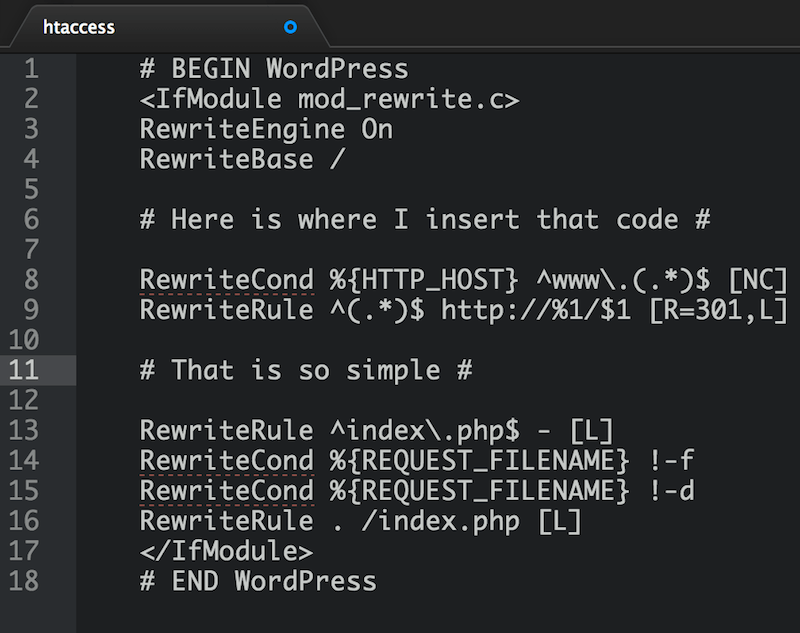

3.4.1. The main mirror is selected (with or without www)

3.4.2. The robots.txt file is customized

3.4.3. The home page is not available at /index.php or /index.html

3.4.4. Old URLs redirect visitors to the new pages

3.4.5. rel=canonical is used

3.4.6. Nonexistent pages return a 404 error

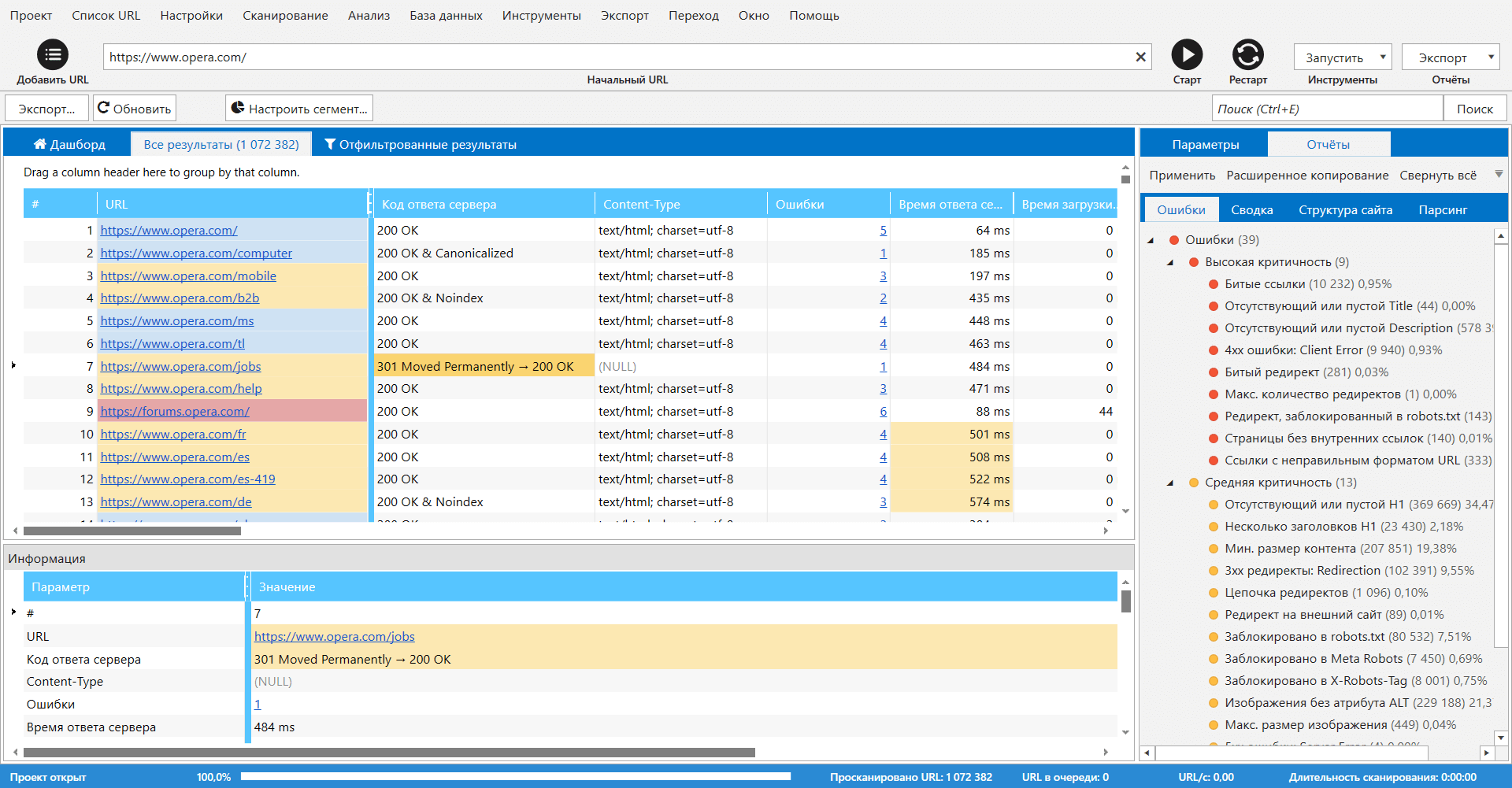

3.4.7. Check the site with Netpeak Spider
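The duplicates in items 3.4.1 and 3.4.3 all come down to several addresses serving one page. The sketch below maps such variants to a single canonical form, which is the target you would use in redirects or in `rel=canonical`. It assumes HTTPS everywhere (item 3.1) and a non-www main mirror by default; `canonical_url` and the example.com addresses are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

INDEX_FILES = ("index.php", "index.html")


def canonical_url(url, use_www=False):
    """Normalize scheme, host, and index-file duplicates of a URL."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    host = "www." + bare if use_www else bare
    path = parts.path or "/"
    for name in INDEX_FILES:
        if path.endswith("/" + name):
            path = path[: -len(name)]  # keep the trailing slash
            break
    return urlunsplit(("https", host, path, parts.query, ""))


print(canonical_url("http://WWW.Example.com/index.php"))
# https://example.com/
```

The actual redirect is configured on the web server; this function only shows which address each variant should resolve to.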

3.5. Users and robots see the same content

3.6. Reliable hosting is used

3.6.1. Antivirus protection is in place

3.6.2. DDoS protection is in place

3.6.3. Backups are configured

3.6.4. Server availability monitoring is configured

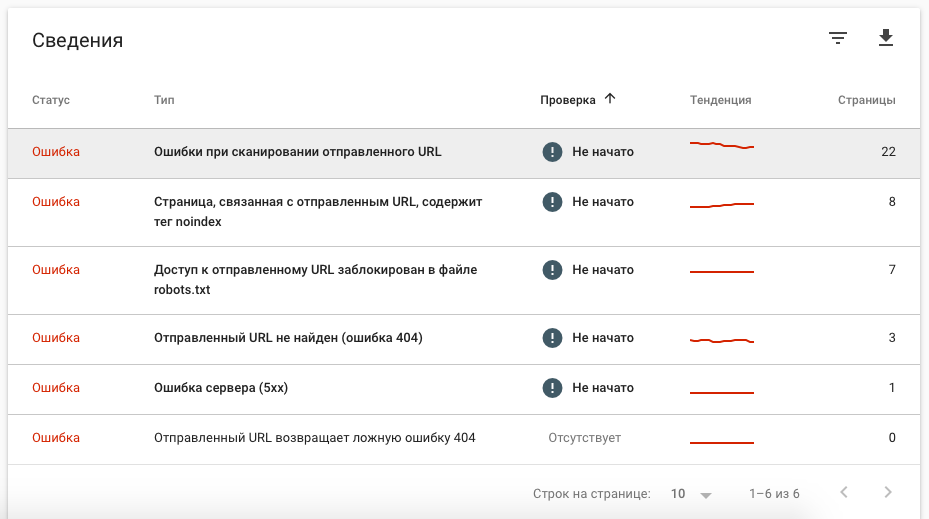

3.7. The site is registered in the webmaster panel

4. On-page SEO and content checklist

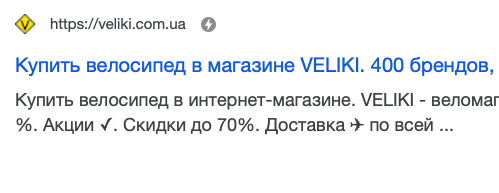

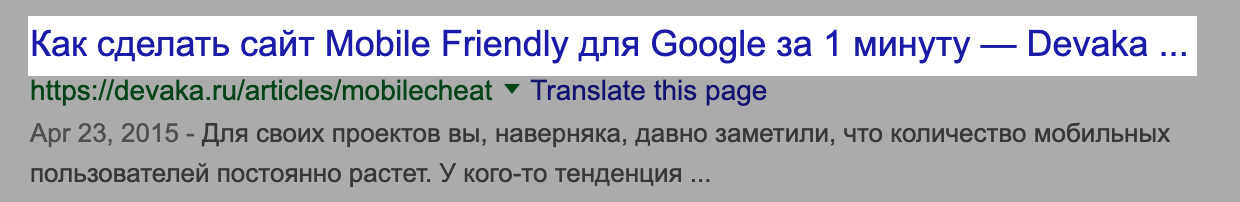

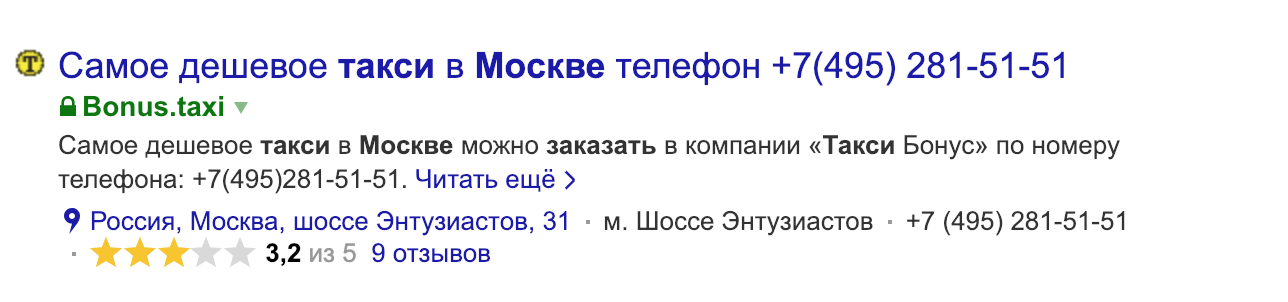

4.1. Optimize <title> tags

4.1.1. Use a short, descriptive title

4.1.2. Reflect the page content in the title

4.1.3. Make titles attractive to click

4.1.4. Use keywords in the title

4.1.5. Put important words at the beginning of the title

4.1.6. The title is unique across the web

4.1.7. Emojis are used

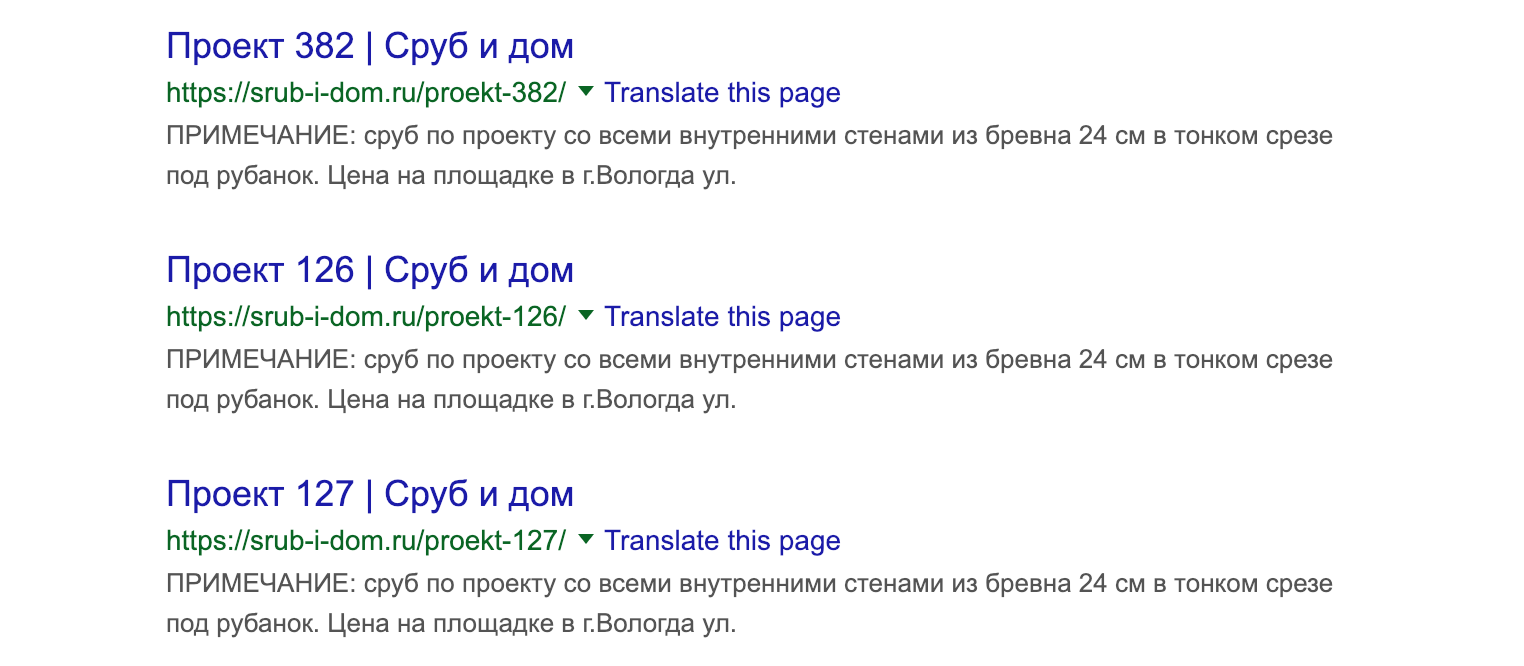

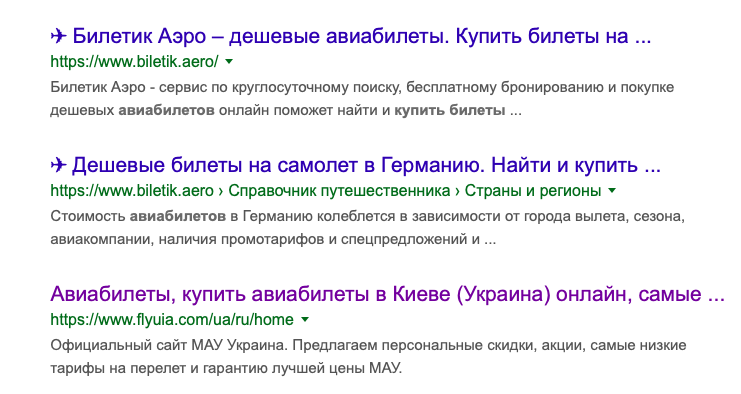

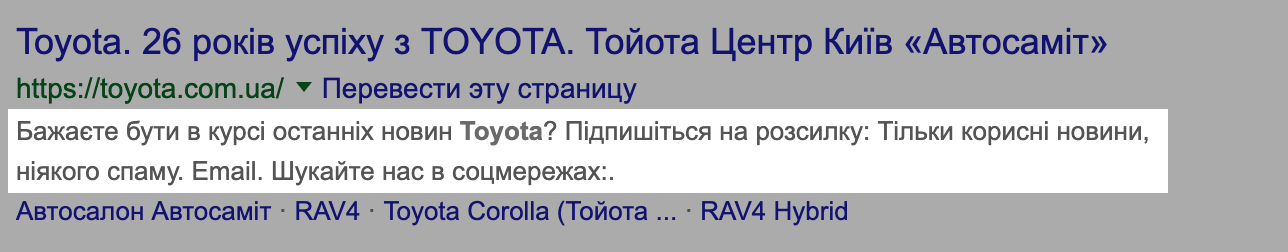

4.2. Optimize snippets

4.2.1. The meta description text does not exceed 250 characters

4.2.2. The description is written to attract attention and prompt the user to act

4.2.3. The description contains the keyword

4.2.4. Use microdata markup
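Several of the title and snippet checks in sections 4.1 and 4.2 are mechanical and can be automated. The sketch below parses a page with Python's standard `html.parser` and reports whether the keyword appears in the title and description and whether the description fits the 250-character limit from item 4.2.1. The class `HeadAudit`, the function `audit_head`, and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Collect the <title> text and the meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head(html, keyword, max_description=250):
    parser = HeadAudit()
    parser.feed(html)
    description = parser.description or ""
    return {
        "has_title": bool(parser.title.strip()),
        "keyword_in_title": keyword.lower() in parser.title.lower(),
        "description_length_ok": 0 < len(description) <= max_description,
        "keyword_in_description": keyword.lower() in description.lower(),
    }


# Made-up page used only to exercise the checks.
page = """<html><head>
<title>Buy a gaming laptop in Kyiv</title>
<meta name="description" content="Compare gaming laptop prices and order with next-day delivery.">
</head><body></body></html>"""
head_report = audit_head(page, "gaming laptop")
print(head_report)
```

Checks such as click appeal (item 4.1.3) or uniqueness across the web (item 4.1.6) still need human judgment or an external tool.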

4.3. Optimize content

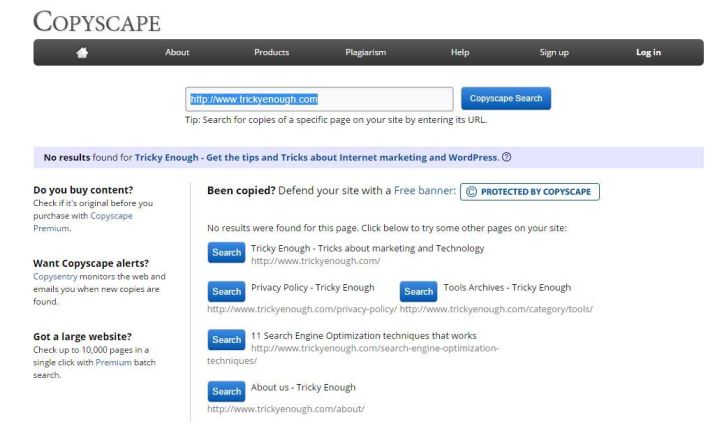

4.3.1. Use unique content

4.3.2. Format the content

4.3.3. Insert key phrases in H1-H6 headings

4.3.4. The key phrase appears in the text

4.3.5. There is no hidden text

4.3.6. There is no duplicate content

4.3.7. Keywords are used in the alt attribute (if the page has images)

4.3.8. There are no pop-up ads covering the main content

4.3.9. The text on the page contains at least 250 words
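Items 4.3.7 and 4.3.9 lend themselves to a quick automated check. The sketch below counts the visible words on a page (ignoring `script` and `style` blocks) and the images that lack alt text. It is an approximation under stated assumptions: words are split on whitespace, text hidden with CSS is not detected, and the names `ContentAudit` and `audit_content` are hypothetical.

```python
from html.parser import HTMLParser


class ContentAudit(HTMLParser):
    """Count visible words and images without alt text."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.words = 0
        self.images_missing_alt = 0
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1
        elif tag == "img" and not (dict(attrs).get("alt") or "").strip():
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def audit_content(html, min_words=250):
    parser = ContentAudit()
    parser.feed(html)
    return {
        "words": parser.words,
        "enough_text": parser.words >= min_words,
        "images_missing_alt": parser.images_missing_alt,
    }


# Made-up page: a heading, a script, 300 filler words, and two images.
sample_page = (
    "<h1>Gaming laptops</h1><script>var x = 1;</script><p>"
    + "word " * 300
    + "</p><img src='a.png'><img src='b.png' alt='gaming laptop'>"
)
content_report = audit_content(sample_page)
print(content_report)
```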

5. Off-page SEO checklist

5.1. Optimize internal links

5.1.1. Every page has at least one text link pointing to it

- pages without one are harder to index in search engines, because the crawler may not find them;

- they will have low internal weight, which hinders their promotion in search engines.

5.1.2. The number of internal links on a page does not exceed 200

5.1.3. Keywords are used in internal links

- laptop price reduction;

- cheap laptop Kyiv;

- buy a gaming laptop;

- a gaming laptop for a child;

- how much a gaming laptop costs, etc.

5.1.4. The Wikipedia principle is applied

5.1.5. The main navigation is accessible to crawlers without JavaScript

5.1.6. All links work (no broken links)
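Items 5.1.1 and 5.1.2 can be verified once a crawl has produced a map of which pages link to which. The sketch below assumes that map is available as a dictionary from each page to the list of internal pages it links to; the functions `find_orphans` and `pages_over_limit` and the sample paths are hypothetical.

```python
def find_orphans(link_graph):
    """Return pages that no other page links to."""
    linked = {
        target
        for source, targets in link_graph.items()
        for target in targets
        if target != source  # a self-link does not help discovery
    }
    return sorted(set(link_graph) - linked)


def pages_over_limit(link_graph, limit=200):
    """Return pages with more internal links than the limit in item 5.1.2."""
    return sorted(page for page, targets in link_graph.items() if len(targets) > limit)


# Made-up crawl result for illustration.
site_links = {
    "/": ["/laptops/", "/netbooks/"],
    "/laptops/": ["/", "/netbooks/"],
    "/netbooks/": ["/"],
    "/old-promo/": ["/"],
}
print(find_orphans(site_links))      # ['/old-promo/']
print(pages_over_limit(site_links))  # []
```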

5.2. Moderate outbound links

5.2.1. Control the quantity and quality of external outbound links

5.2.2. Irrelevant and unmoderated outbound links are closed with rel=nofollow

5.2.3. Links posted by visitors are moderated

5.2.4. There is no link farm
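For item 5.2.2, it helps to list the external links on a page that are still followed, so that each one can be reviewed. The sketch below does this with Python's standard `html.parser`. It assumes links to any host other than your own count as external; the class name `OutboundLinkAudit`, the host example.com, and the sample markup are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class OutboundLinkAudit(HTMLParser):
    """Collect external links that do not carry rel=nofollow."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host.lower()
        self.followed_external = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href") or ""
        host = urlsplit(href).netloc.lower()
        rel = (attrs.get("rel") or "").lower().split()
        # Relative links have no host and are treated as internal.
        if host and host != self.site_host and "nofollow" not in rel:
            self.followed_external.append(href)


# Made-up markup for illustration.
links_page = """
<a href="/laptops/">Laptops</a>
<a href="https://partner.example.org/review">Review</a>
<a href="https://forum.example.net/post" rel="nofollow ugc">Forum post</a>
"""
link_audit = OutboundLinkAudit("example.com")
link_audit.feed(links_page)
print(link_audit.followed_external)  # ['https://partner.example.org/review']
```

A followed external link is not an error in itself; the list is a starting point for the manual review described in item 5.2.1.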

5.3. Build backlinks to the site

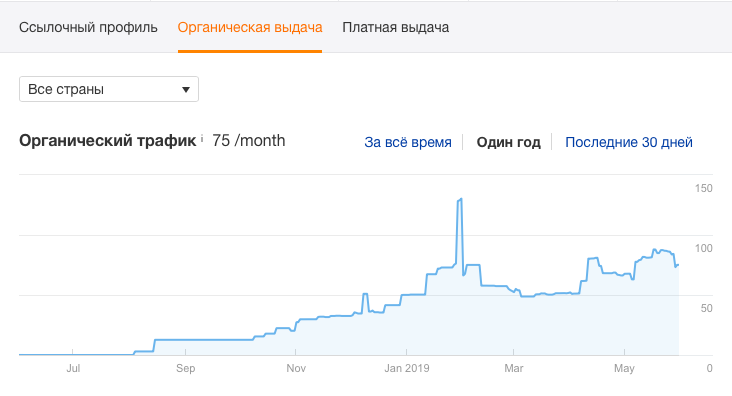

5.3.1. The link profile shows positive dynamics

5.3.2. Work is under way to increase the site's authority

- ahrefs.com;

- majestic.com;

- moz.com and others.

- links from home pages;

- links from guest articles on trusted sites;

- sitewide links.

5.3.3. External link anchors do not use keywords

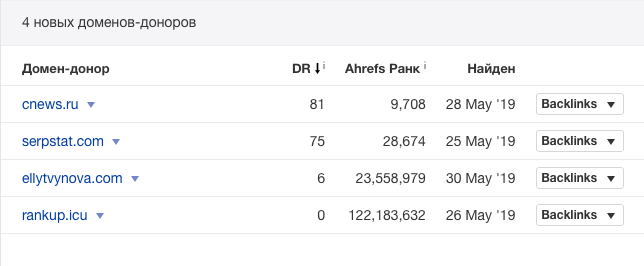

5.3.4. Unique domains are used

5.3.5. Links are placed from topically relevant documents

5.3.6. The range of donor sites is expanding

- personal blogs of air conditioner installers;

- repair service sites;

- construction and design service sites;

- technology sites;

- sites that have a technology section;

- commercial sites;

- media platforms.

5.3.7. Tracking of acquired links is configured

- linkchecker.pro;

- ahrefs.com;

- others.

5.3.8. Changes in the link profile are monitored

- pay attention to new links and their sources;

- make sure there are no spam links from competitors;

- keep the anchor list under control.
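The anchor-list control in item 5.3.8, together with items 5.3.3 and 5.3.4, can be summarized from a backlink export. The sketch below assumes each backlink is a dictionary with `domain` and `anchor` keys, which is a made-up format: real exports from tools such as Ahrefs use their own column names. The function name `anchor_report` and the sample data are hypothetical.

```python
from collections import Counter


def anchor_report(backlinks, keywords):
    """Summarize referring domains and keyword usage in anchor texts."""
    anchors = Counter(link["anchor"].strip().lower() for link in backlinks)
    total = sum(anchors.values())
    keyword_hits = sum(
        count
        for anchor, count in anchors.items()
        if any(keyword.lower() in anchor for keyword in keywords)
    )
    return {
        "unique_domains": len({link["domain"] for link in backlinks}),
        "keyword_anchor_share": keyword_hits / total if total else 0.0,
        "top_anchors": anchors.most_common(3),
    }


# Made-up backlink data for illustration.
sample_backlinks = [
    {"domain": "a.example", "anchor": "example.com"},
    {"domain": "b.example", "anchor": "here"},
    {"domain": "c.example", "anchor": "Buy gaming laptop"},
    {"domain": "a.example", "anchor": "example.com"},
]
backlink_report = anchor_report(sample_backlinks, ["gaming laptop"])
print(backlink_report["unique_domains"])        # 3
print(backlink_report["keyword_anchor_share"])  # 0.25
```

Running the same report on successive exports shows whether the share of keyword anchors or the number of unique domains is drifting over time.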

Launching a site is a large and painstaking job. Many details must be taken into account for search engines to favor the resource from day one. A detailed, free checklist helps systematize the work and cover all on-page, technical, and off-page SEO requirements.

The SEO checklist for new websites helps you account for every nuance and optimize the site correctly before launch.

Collaborator's SEO checklist covers the most important points of do-it-yourself site promotion: keyword analysis, site structure planning, and content optimization. The technical SEO checklist helps configure the technical elements, and the off-page SEO checklist covers internal linking and backlinks to the site. If necessary, you can add your own items to check.

Our search engine optimization checklist can be useful to:

- SEO specialists,

- SEO team leads,

- marketers,

- webmasters,

- business owners.

With this SEO checklist, you can also audit the current state of an existing site and adjust the SEO specialist's efforts accordingly.

The SEO checklist is free for everyone to use.